Study 4: Extroversion Data Analysis

Full Model Prior-Posterior Sensitivity Part 1

R. Noah Padgett

2022-01-17

Last updated: 2022-02-15

Checks: 4 2

Knit directory: Padgett-Dissertation/

This reproducible R Markdown analysis was created with workflowr (version 1.6.2). The Checks tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproduciblity it’s best to always run the code in an empty environment.

The command set.seed(20210401) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

- base-base

- model4-alt-a-alt-a

- model4-alt-a-alt-b

- model4-alt-a-alt-c

- model4-alt-a-alt-d

- model4-alt-a-base

- model4-alt-b-alt-a

- model4-alt-b-alt-b

- model4-alt-b-alt-c

- model4-alt-b-alt-d

- model4-alt-b-base

- model4-alt-c-alt-a

- model4-alt-c-alt-b

- model4-alt-c-alt-c

- model4-alt-c-alt-d

- model4-alt-c-base

- model4-alt-d-alt-a

- model4-alt-d-alt-b

- model4-alt-d-alt-c

- model4-alt-d-alt-d

- model4-alt-d-base

- model4-alt-e-alt-a

- model4-alt-e-alt-b

- model4-alt-e-alt-c

- model4-alt-e-alt-d

- model4-alt-e-base

- model4-alt-f-alt-a

- model4-alt-f-alt-b

- model4-alt-f-alt-c

- model4-alt-f-alt-d

- model4-alt-f-base

- model4-alt-g-alt-a

- model4-alt-g-alt-b

- model4-alt-g-alt-c

- model4-alt-g-alt-d

- model4-alt-g-base

- model4-base-alt-a

- model4-base-alt-b

- model4-base-alt-c

- model4-base-alt-d

- model4-code

- model4-post-prior-comp

- model4-spec-alt-a

- model4-spec-alt-b

- model4-spec-alt-c

- model4-spec-alt-d

- model4-spec-alt-e

- model4-spec-alt-f

- model4-spec-alt-g

- model4-spec-compare

To ensure reproducibility of the results, delete the cache directory study4_posterior_sensitivity_analysis_part4_cache and re-run the analysis. To have workflowr automatically delete the cache directory prior to building the file, set delete_cache = TRUE when running wflow_build() or wflow_publish().

Great job! Using relative paths to the files within your workflowr project makes it easier to run your code on other machines.

Tracking code development and connecting the code version to the results is critical for reproducibility. To start using Git, open the Terminal and type git init in your project directory.

This project is not being versioned with Git. To obtain the full reproducibility benefits of using workflowr, please see ?wflow_start.

# Load packages & utility functions

source("code/load_packages.R")

source("code/load_utility_functions.R")

# environment options

options(scipen = 999, digits=3)

library(diffIRT)

data("extraversion")

mydata <- na.omit(extraversion)

# model constants

# Save parameters

jags.params <- c("tau",

"lambda","lambda.std",

"theta",

"icept",

"prec",

"prec.s",

"sigma.ts",

"rho",

"reli.omega")

#data

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2

)

NBURN = 5000

NITER = 10000Model 4: Full IFA with Misclassification

The code below contains the specification of the full model that has been used throughout this project.

cat(read_file(paste0(w.d, "/code/study_4/model_4.txt")))model {

### Model

for(p in 1:N){

for(i in 1:nit){

# data model

y[p,i] ~ dbern(omega[p,i,2])

# LRV

ystar[p,i] ~ dnorm(lambda[i]*eta[p], 1)

# Pr(nu = 2)

pi[p,i,2] = phi(ystar[p,i] - tau[i,1])

# Pr(nu = 1)

pi[p,i,1] = 1 - phi(ystar[p,i] - tau[i,1])

# log-RT model

dev[p,i]<-lambda[i]*(eta[p] - tau[i,1])

mu.lrt[p,i] <- icept[i] - speed[p] - rho * abs(dev[p,i])

lrt[p,i] ~ dnorm(mu.lrt[p,i], prec[i])

# MISCLASSIFICATION MODEL

for(c in 1:ncat){

# generate informative prior for misclassificaiton

# parameters based on RT

for(ct in 1:ncat){

alpha[p,i,ct,c] <- ifelse(c == ct,

ilogit(lrt[p,i]),

(1/(ncat-1))*(1-ilogit(lrt[p,i]))

)

}

# sample misclassification parameters using the informative priors

gamma[p,i,c,1:ncat] ~ ddirch(alpha[p,i,c,1:ncat])

# observed category prob (Pr(y=c))

omega[p,i, c] = gamma[p,i,c,1]*pi[p,i,1] +

gamma[p,i,c,2]*pi[p,i,2]

}

}

}

### Priors

# person parameters

for(p in 1:N){

eta[p] ~ dnorm(0, 1) # latent ability

speed[p]~dnorm(sigma.ts*eta[p],prec.s) # latent speed

}

sigma.ts ~ dnorm(0, 0.1)

prec.s ~ dgamma(.1,.1)

# transformations

sigma.t = pow(prec.s, -1) + pow(sigma.ts, 2) # speed variance

cor.ts = sigma.ts/(pow(sigma.t,0.5)) # LV correlation

for(i in 1:nit){

# lrt parameters

icept[i]~dnorm(0,.1)

prec[i]~dgamma(.1,.1)

# Thresholds

tau[i, 1] ~ dnorm(0.0,0.1)

# loadings

lambda[i] ~ dnorm(0, .44)T(0,)

# LRV total variance

# total variance = residual variance + fact. Var.

theta[i] = 1 + pow(lambda[i],2)

# standardized loading

lambda.std[i] = lambda[i]/pow(theta[i],0.5)

}

rho~dnorm(0,.1)I(0,)

# compute omega

lambda_sum[1] = lambda[1]

for(i in 2:nit){

#lambda_sum (sum factor loadings)

lambda_sum[i] = lambda_sum[i-1]+lambda[i]

}

reli.omega = (pow(lambda_sum[nit],2))/(pow(lambda_sum[nit],2)+nit)

}# omega simulator

prior_omega <- function(lambda, theta){

(sum(lambda)**2)/(sum(lambda)**2 + sum(theta))

}

# induced prior on omega is:

prior_lambda <- function(n){

y <- rep(-1, n)

for(i in 1:n){

while(y[i] < 0){

y[i] <- rnorm(1, 0, sqrt(1/.44))

}

}

return(y)

}

nsim=1000

sim_omega <- numeric(nsim)

for(i in 1:nsim){

lam_vec <- prior_lambda(10)

tht_vec <- rep(1, 10)

sim_omega[i] <- prior_omega(lam_vec, tht_vec)

}

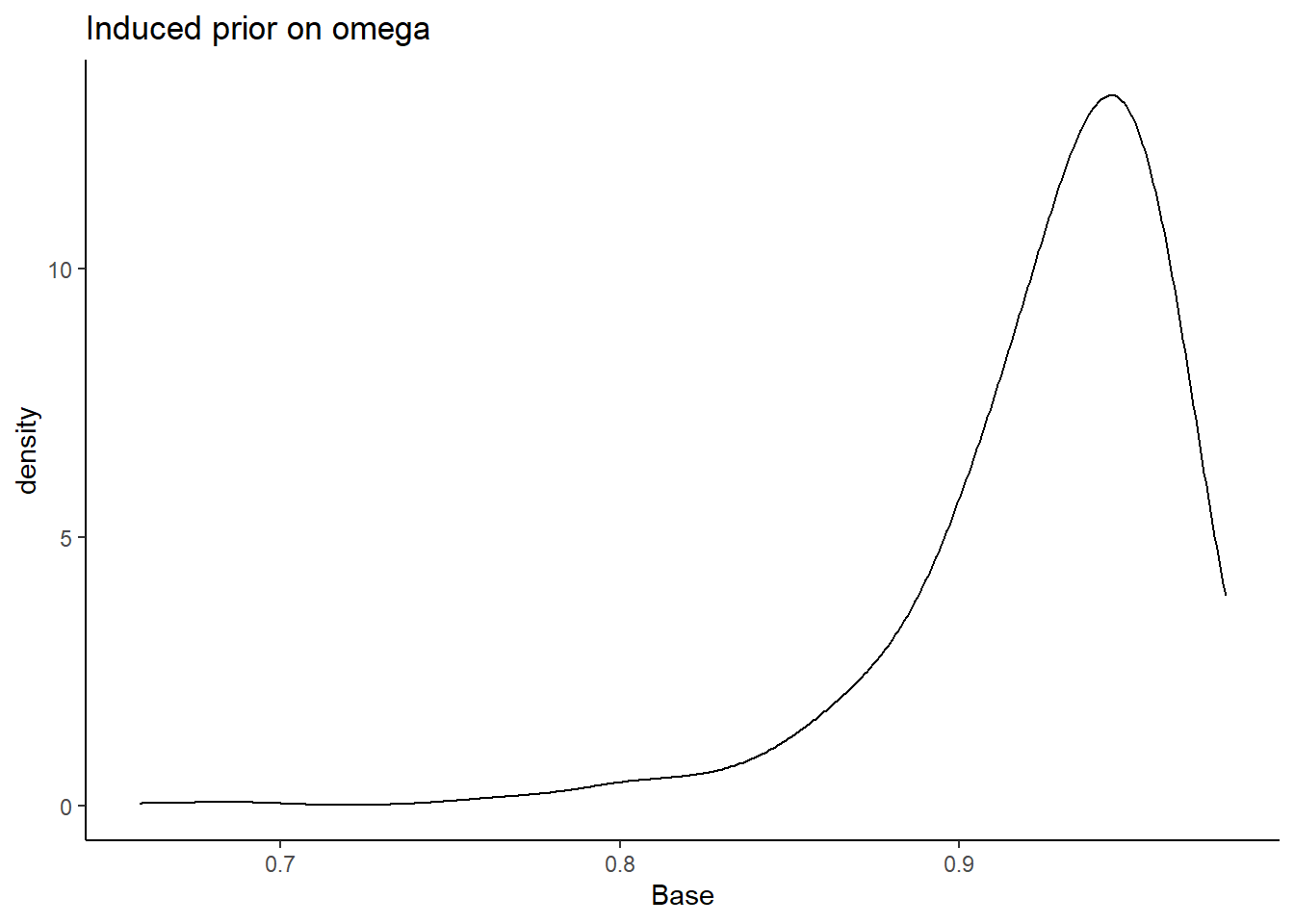

prior_data <- data.frame(Base=sim_omega)

ggplot(prior_data, aes(x=Base))+

geom_density(adjust=2)+

labs(title="Induced prior on omega")+

theme_classic()

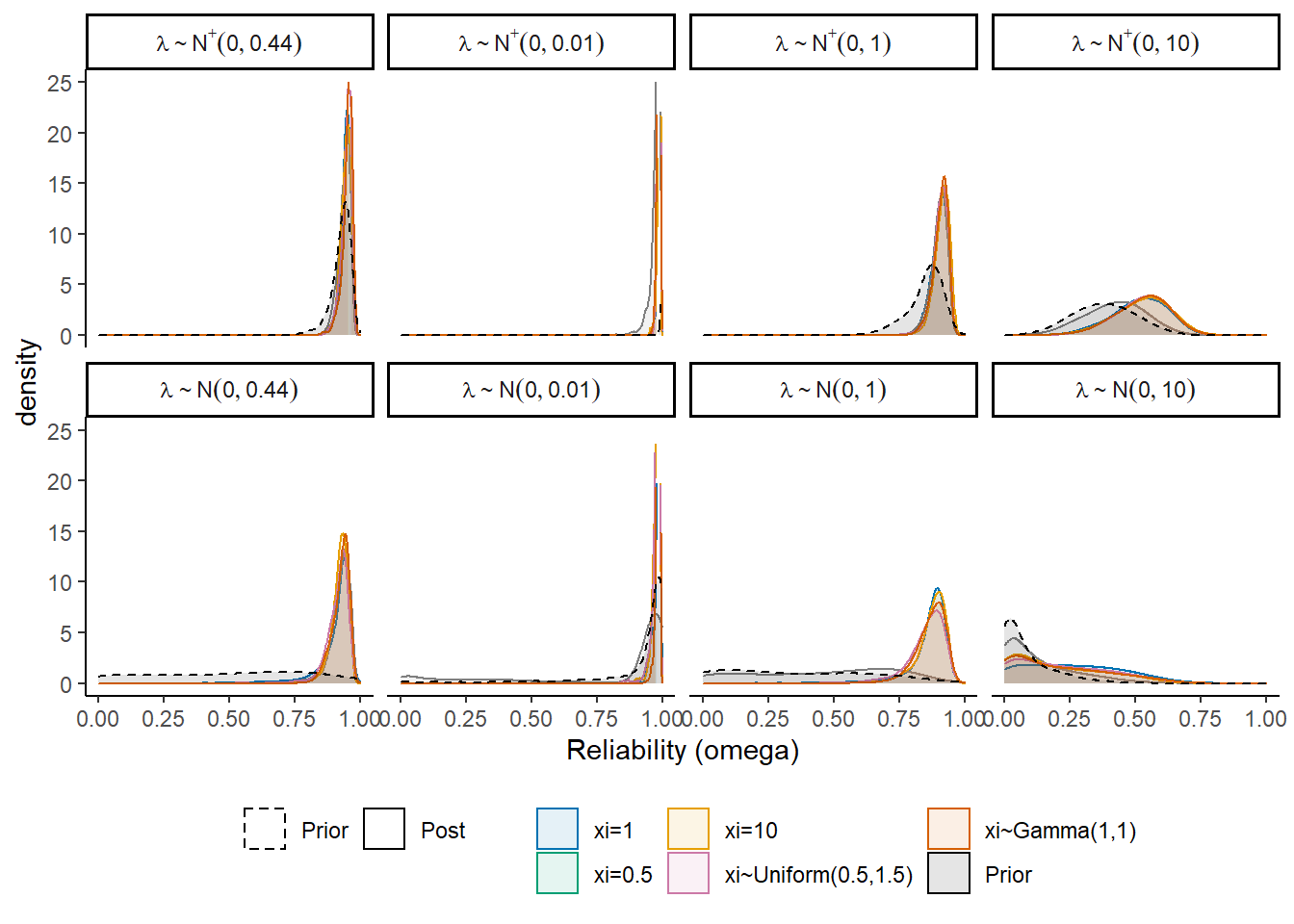

I will test the various different prior structures. The prior structure is very complex. There are many moving pieces in this posterior distribution and for this prior-posterior sensitivity analysis we will focus on the effects of prior specification on the posterior of \(\omega\) only.

The pieces are most likely to effect the posterior of \(\omega\) are the priors for the

factor loadings (\(\lambda\))

misclassification rates (\(\gamma\)) by tuning of misclassification

For each specification below, we will show the induced prior on \(\omega\).

Factor Loading Prior Alternatives

For the following investigations, the prior for the tuning parameter of misclassification rates is held constant at 1. The following major section will test how the tuning paramter incluences the results as well.

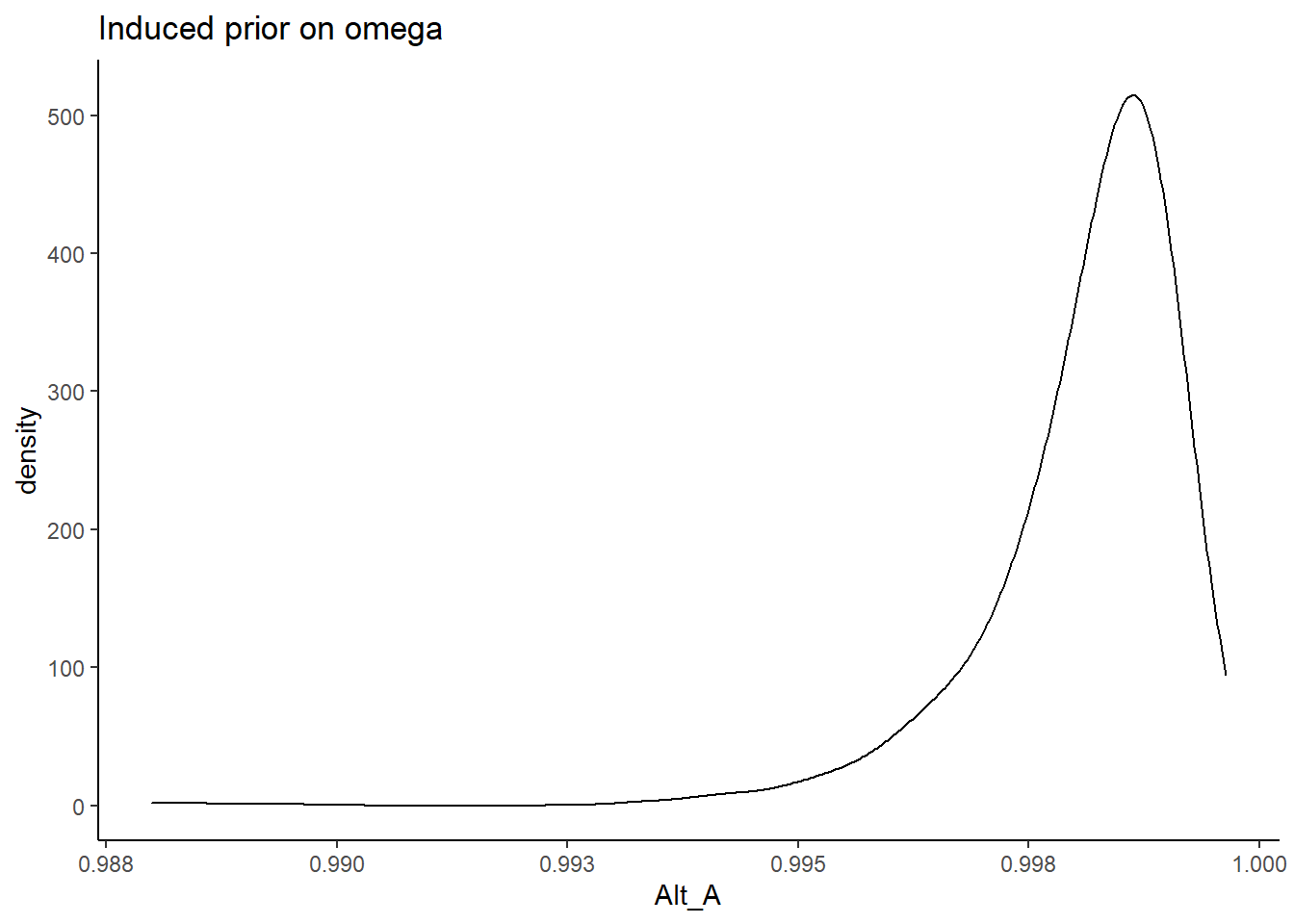

Alternative A

\[\lambda \sim N^+(0,.44) \Longrightarrow \lambda \sim N^+(0,.01)\] and remember, the variability is parameterized as the precision and not variance.

prior_lambda_A <- function(n){

y <- rep(-1, n)

for(i in 1:n){

while(y[i] < 0){

y[i] <- rnorm(1, 0, sqrt(1/.01))

}

}

return(y)

}

nsim=1000

sim_omega <- numeric(nsim)

for(i in 1:nsim){

lam_vec <- prior_lambda_A(10)

tht_vec <- rep(1, 10)

sim_omega[i] <- prior_omega(lam_vec, tht_vec)

}

prior_data$Alt_A <- sim_omega

ggplot(prior_data, aes(x=Alt_A))+

geom_density(adjust=2)+

labs(title="Induced prior on omega")+

theme_classic()

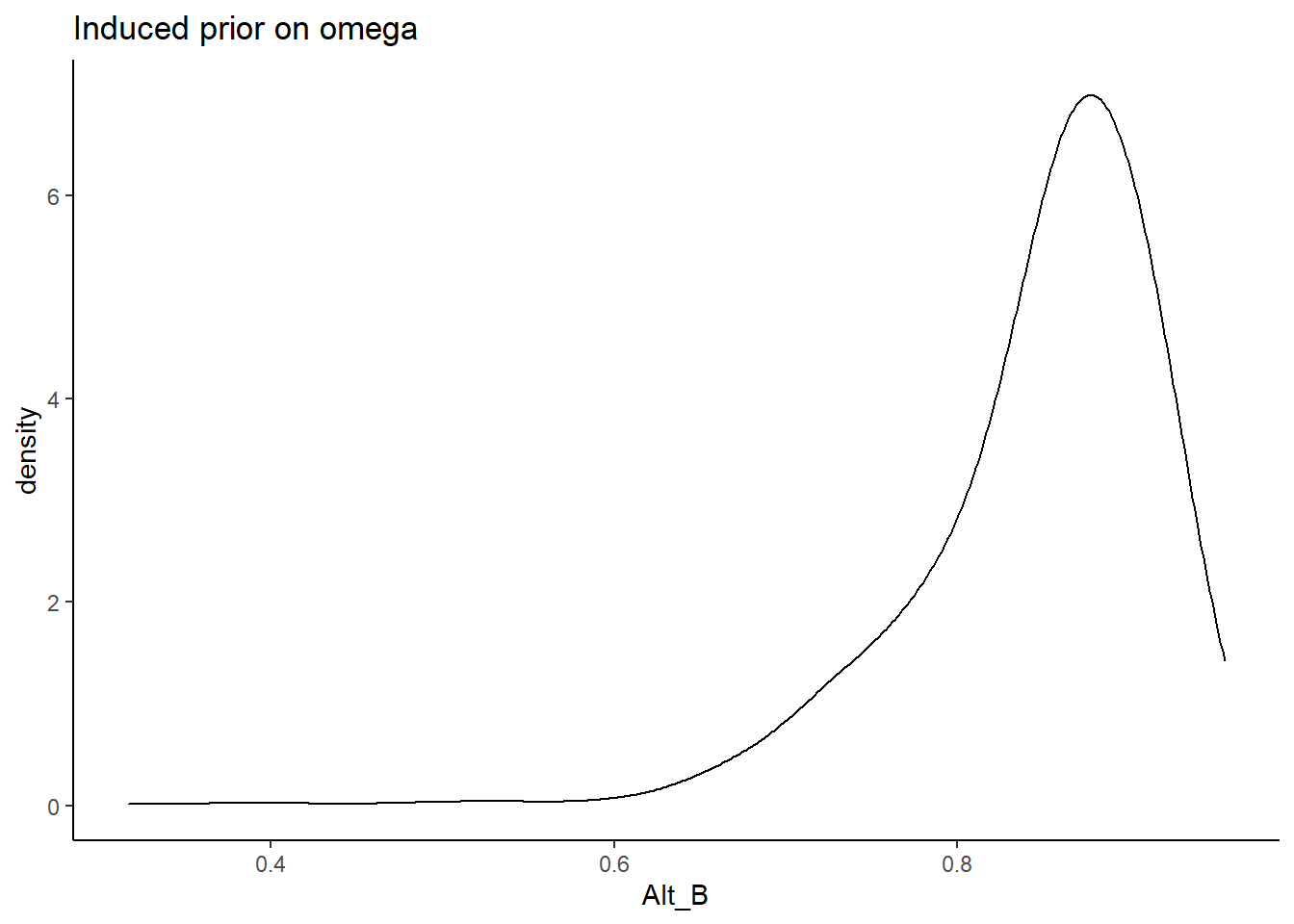

Alternative B

\[\lambda \sim N^+(0,.44) \Longrightarrow \lambda \sim N^+(0,1)\]

prior_lambda_B <- function(n){

y <- rep(-1, n)

for(i in 1:n){

while(y[i] < 0){

y[i] <- rnorm(1, 0, 1)

}

}

return(y)

}

sim_omega <- numeric(nsim)

for(i in 1:nsim){

lam_vec <- prior_lambda_B(10)

tht_vec <- rep(1, 10)

sim_omega[i] <- prior_omega(lam_vec, tht_vec)

}

prior_data$Alt_B <- sim_omega

ggplot(prior_data, aes(x=Alt_B))+

geom_density(adjust=2)+

labs(title="Induced prior on omega")+

theme_classic()

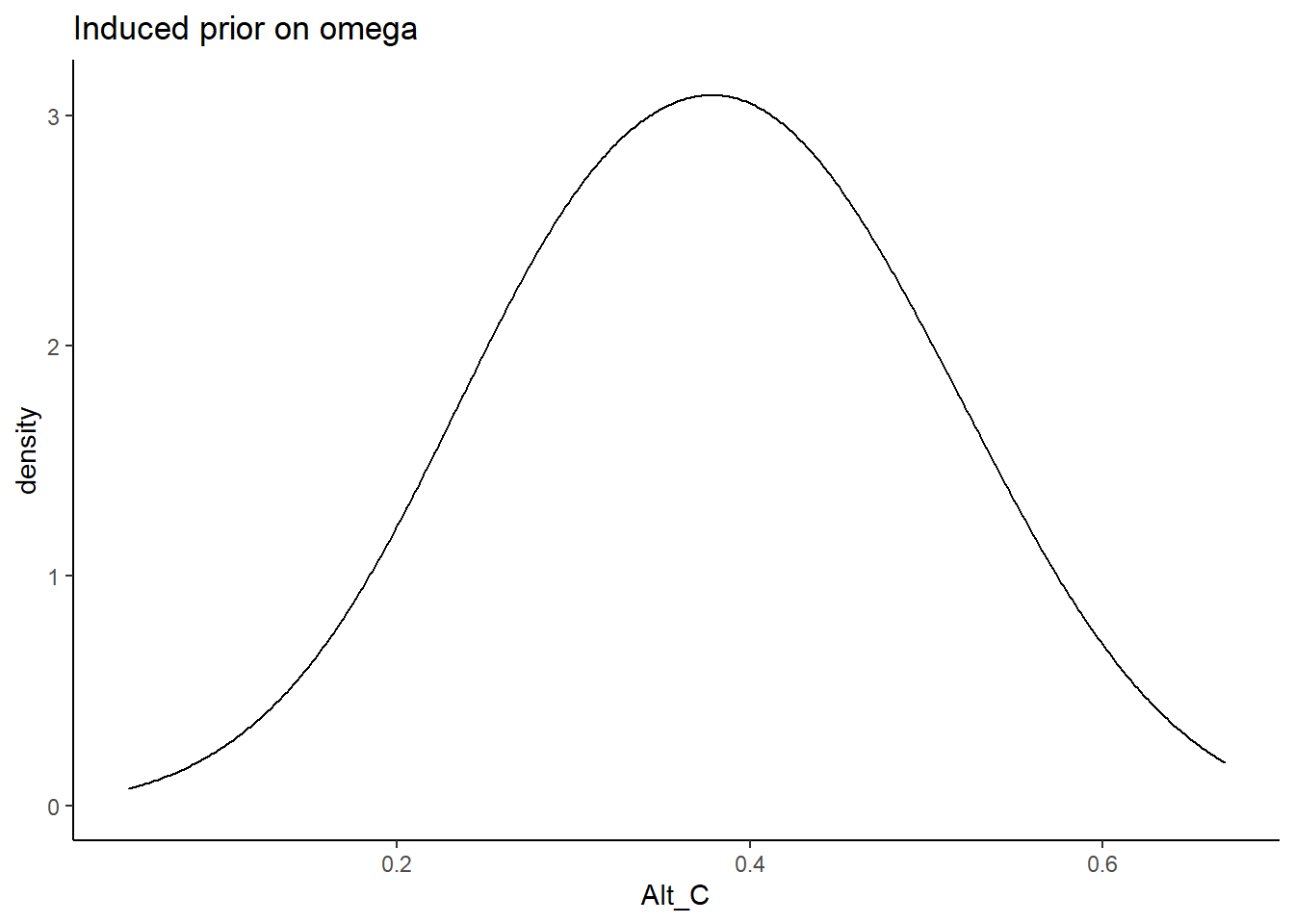

Alternative C

\[\lambda \sim N^+(0,.44) \Longrightarrow \lambda \sim N^+(0,10)\]

prior_lambda_C <- function(n){

y <- rep(-1, n)

for(i in 1:n){

while(y[i] < 0){

y[i] <- rnorm(1, 0, sqrt(1/10))

}

}

return(y)

}

sim_omega <- numeric(nsim)

for(i in 1:nsim){

lam_vec <- prior_lambda_C(10)

tht_vec <- rep(1, 10)

sim_omega[i] <- prior_omega(lam_vec, tht_vec)

}

prior_data$Alt_C <- sim_omega

ggplot(prior_data, aes(x=Alt_C))+

geom_density(adjust=2)+

labs(title="Induced prior on omega")+

theme_classic()

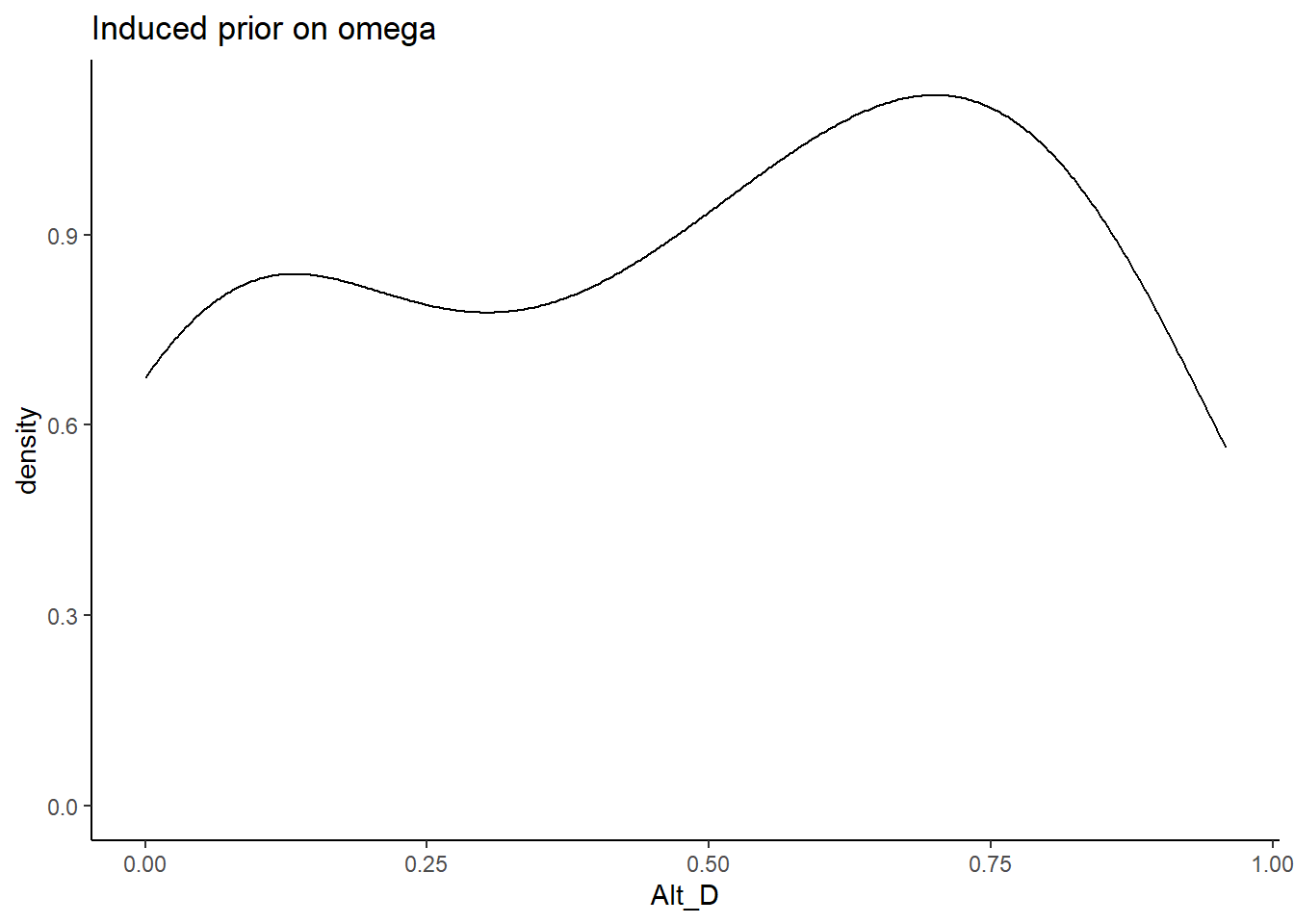

Alternative D

\[\lambda \sim N^+(0,.44) \Longrightarrow \lambda \sim N(0, 0.44)\]

prior_lambda_D <- function(n){

rnorm(n, 0, sqrt(1/0.44))

}

sim_omega <- numeric(nsim)

for(i in 1:nsim){

lam_vec <- prior_lambda_D(10)

tht_vec <- rep(1, 10)

sim_omega[i] <- prior_omega(lam_vec, tht_vec)

}

prior_data$Alt_D <- sim_omega

ggplot(prior_data, aes(x=Alt_D))+

geom_density(adjust=2)+

labs(title="Induced prior on omega")+

theme_classic()

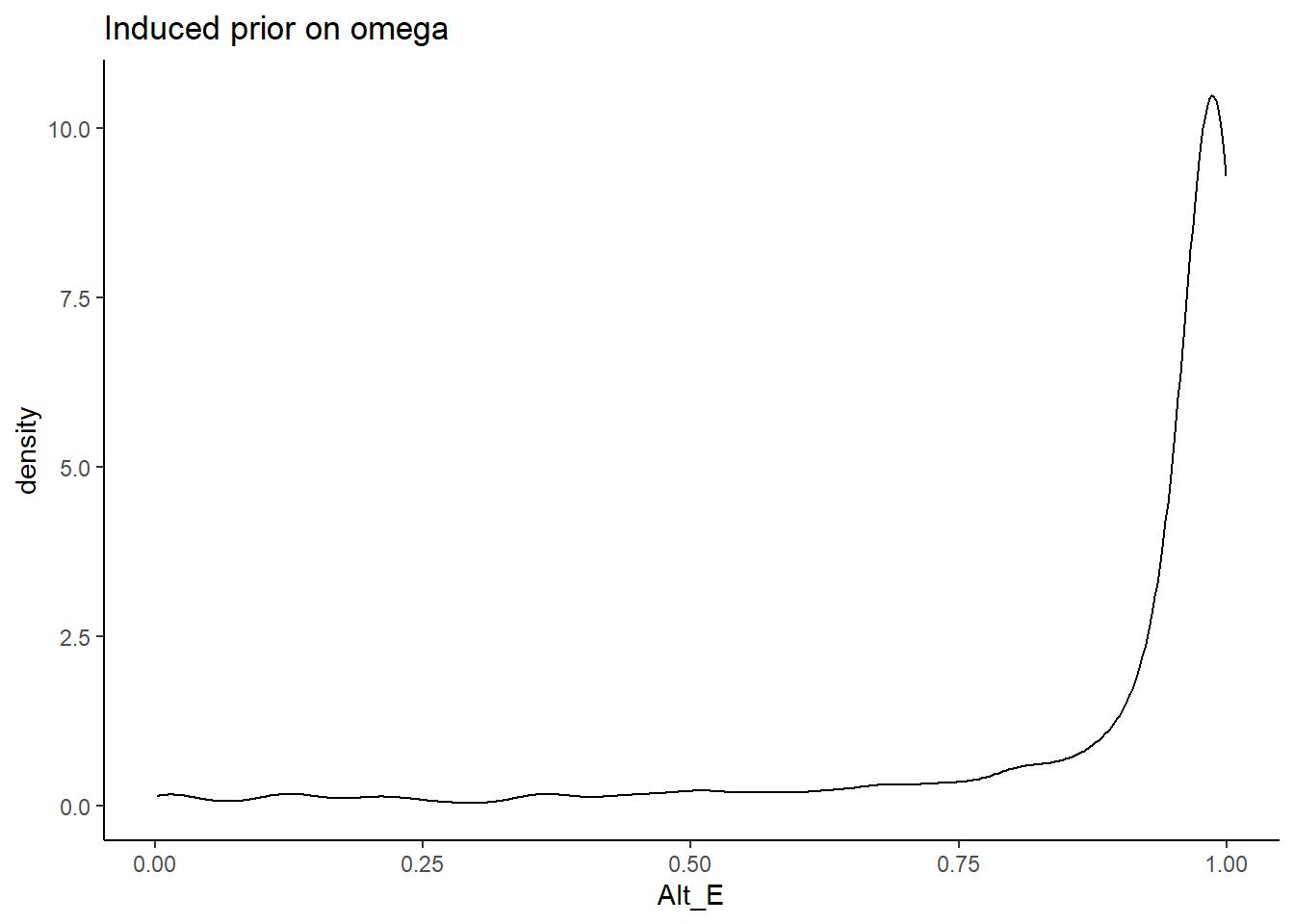

Alternative E

\[\lambda \sim N^+(0,.44) \Longrightarrow \lambda \sim N(0,0.01)\]

prior_lambda_E <- function(n){

rnorm(n, 0, sqrt(1/0.01))

}

sim_omega <- numeric(nsim)

for(i in 1:nsim){

lam_vec <- prior_lambda_E(10)

tht_vec <- rep(1, 10)

sim_omega[i] <- prior_omega(lam_vec, tht_vec)

}

prior_data$Alt_E <- sim_omega

ggplot(prior_data, aes(x=Alt_E))+

geom_density(adjust=2)+

labs(title="Induced prior on omega")+

theme_classic()

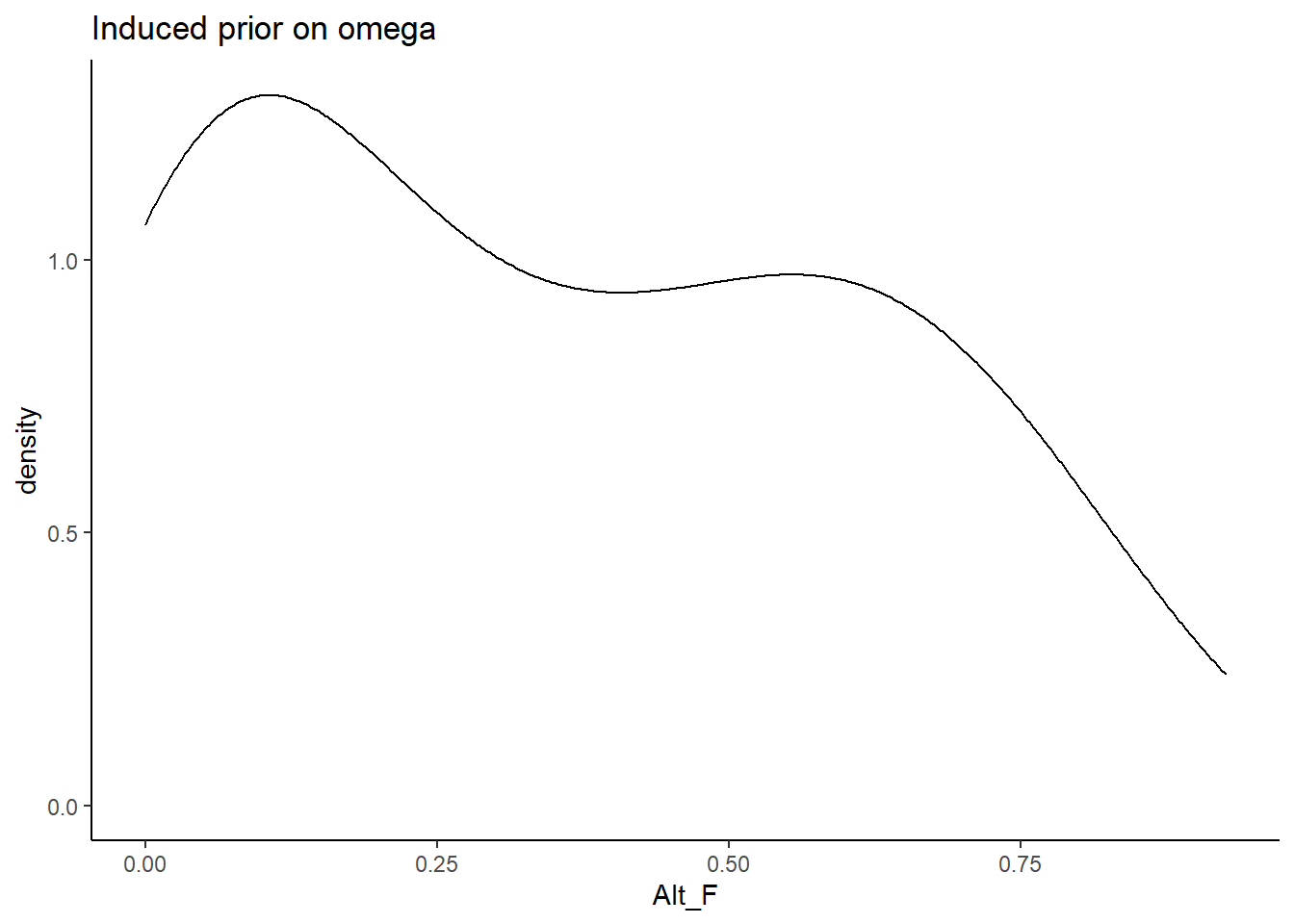

Alternative F

\[\lambda \sim N^+(0,.44) \Longrightarrow \lambda \sim N(0,1)\]

prior_lambda_F <- function(n){

rnorm(n, 0, 1)

}

sim_omega <- numeric(nsim)

for(i in 1:nsim){

lam_vec <- prior_lambda_F(10)

tht_vec <- rep(1, 10)

sim_omega[i] <- prior_omega(lam_vec, tht_vec)

}

prior_data$Alt_F <- sim_omega

ggplot(prior_data, aes(x=Alt_F))+

geom_density(adjust=2)+

labs(title="Induced prior on omega")+

theme_classic()

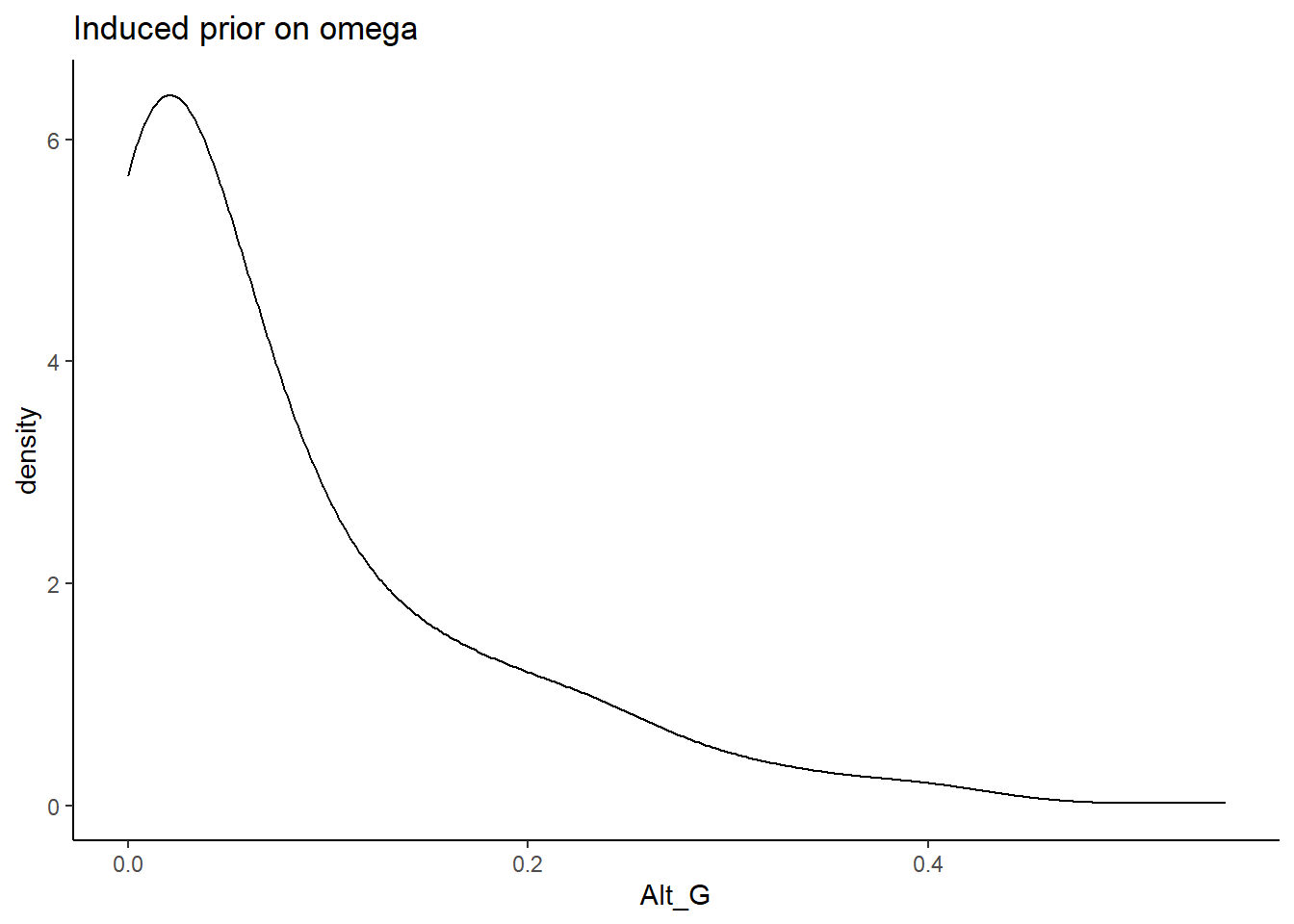

Alternative G

\[\lambda \sim N^+(0,.44) \Longrightarrow \lambda \sim N(0,10)\]

prior_lambda_G <- function(n){

rnorm(n, 0, sqrt(1/10))

}

sim_omega <- numeric(nsim)

for(i in 1:nsim){

lam_vec <- prior_lambda_G(10)

tht_vec <- rep(1, 10)

sim_omega[i] <- prior_omega(lam_vec, tht_vec)

}

prior_data$Alt_G <- sim_omega

ggplot(prior_data, aes(x=Alt_G))+

geom_density(adjust=2)+

labs(title="Induced prior on omega")+

theme_classic()

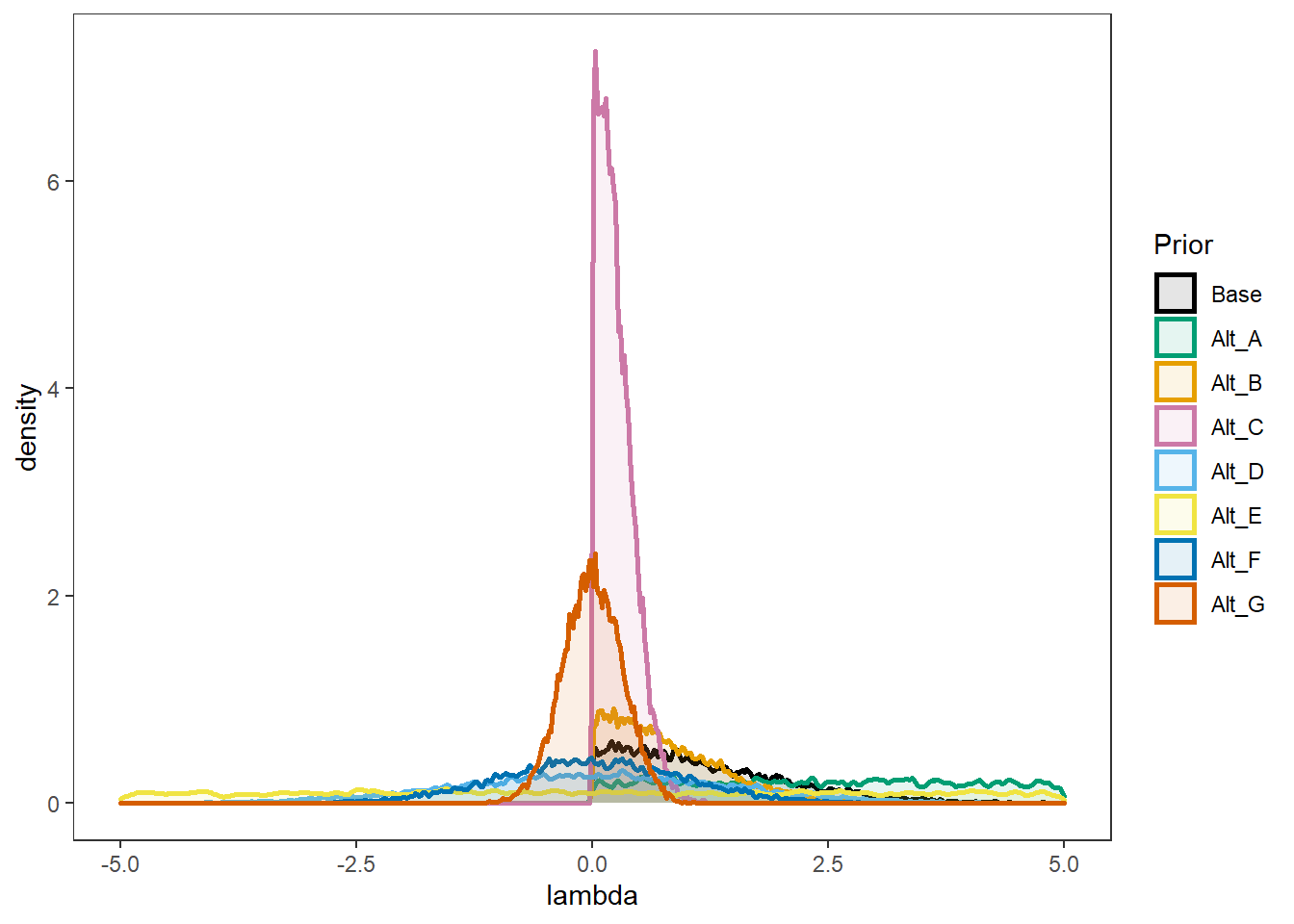

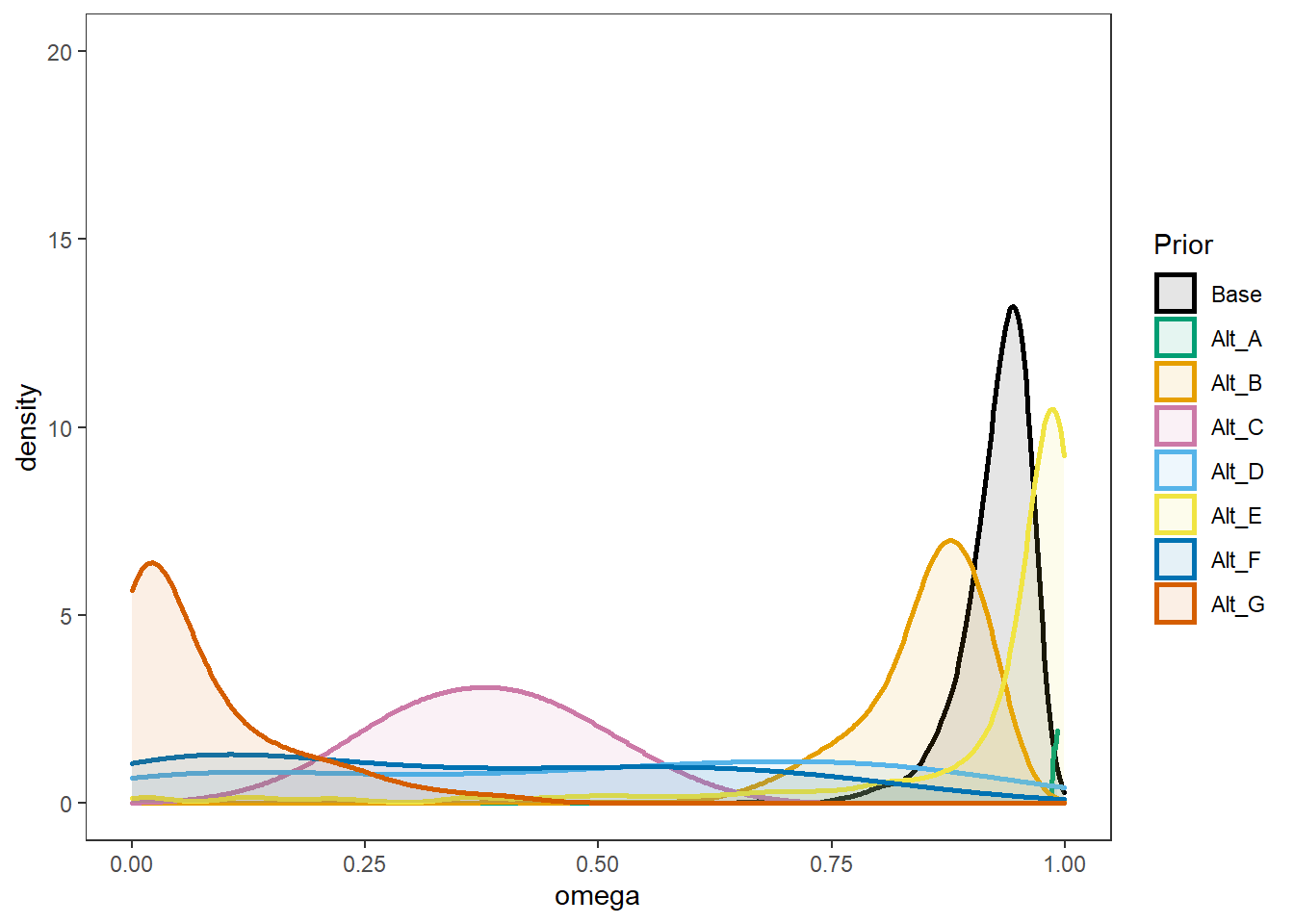

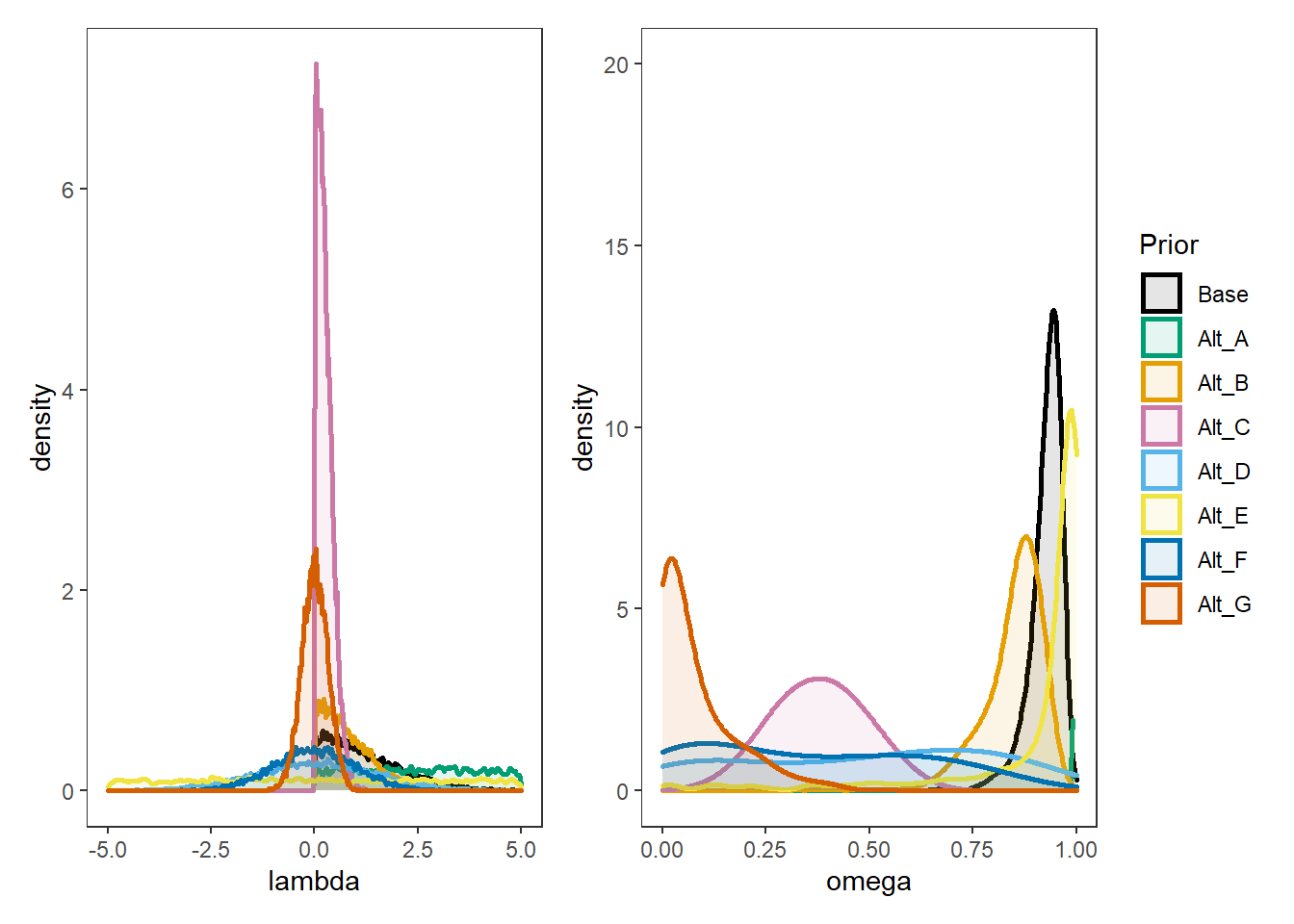

Comparing A-E

# lambda

prior_dat_lambda <- data.frame(

Base = prior_lambda(10000),

Alt_A = prior_lambda_A(10000),

Alt_B = prior_lambda_B(10000),

Alt_C = prior_lambda_C(10000),

Alt_D = prior_lambda_D(10000),

Alt_E = prior_lambda_E(10000),

Alt_F = prior_lambda_F(10000),

Alt_G = prior_lambda_G(10000)

)%>%

pivot_longer(

cols=everything(),

names_to="Prior",

values_to="lambda"

) %>%

mutate(

Prior = factor(Prior, levels=c("Base", "Alt_A", "Alt_B", "Alt_C", "Alt_D", "Alt_E", "Alt_F", "Alt_G"))

)

cols=c("Base"="black", "Alt_A"="#009e73", "Alt_B"="#E69F00", "Alt_C"="#CC79A7","Alt_D"="#56B4E9", "Alt_E"="#f0e442", "Alt_F"="#0072b2", "Alt_G"="#d55e00") #"#56B4E9", "#E69F00" "#CC79A7", "#d55e00", "#f0e442, " #0072b2"

p1 <- ggplot(prior_dat_lambda, aes(x=lambda, color=Prior, fill=Prior))+

geom_density(adjust=0.1, alpha=0.1, size=1)+

scale_color_manual(values=cols, name="Prior")+

scale_fill_manual(values=cols, name="Prior")+

lims(x=c(-5,5))+

theme_bw()+

theme(

panel.grid = element_blank()

)

p1Warning: Removed 12411 rows containing non-finite values (stat_density).

plot.prior <- prior_data %>%

pivot_longer(

cols=everything(),

names_to="Prior",

values_to="omega"

) %>%

mutate(

Prior = factor(Prior, levels=c("Base", "Alt_A", "Alt_B", "Alt_C", "Alt_D", "Alt_E", "Alt_F", "Alt_G"))

)

cols=c("Base"="black", "Alt_A"="#009e73", "Alt_B"="#E69F00", "Alt_C"="#CC79A7","Alt_D"="#56B4E9", "Alt_E"="#f0e442", "Alt_F"="#0072b2", "Alt_G"="#d55e00") #"#56B4E9", "#E69F00" "#CC79A7", "#d55e00", "#f0e442, " #0072b2"

p2 <- ggplot(plot.prior, aes(x=omega, color=Prior, fill=Prior))+

geom_density(adjust=2, alpha=0.1, size=1)+

scale_color_manual(values=cols, name="Prior")+

scale_fill_manual(values=cols, name="Prior")+

lims(y=c(0,20))+

theme_bw()+

theme(

panel.grid = element_blank()

)

p2

p1 + p2 + plot_layout(guides="collect")Warning: Removed 12411 rows containing non-finite values (stat_density).

Estimate Models

Base Priors

For the base model, the priors are

\[\lambda \sim N^+(0,.44)\] \[\xi = 1\]

# Save parameters

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]")

# initial-values

jags.inits <- function(){

list(

"tau"=matrix(c(-0.64, -0.09, -1.05, -1.42, -0.11, -1.29, -1.59, -1.81, -0.93, -1.11), ncol=1, nrow=10),

"lambda"=rep(0.7,10),

"eta"=rnorm(142),

"speed"=rnorm(142),

"ystar"=matrix(c(0.7*rep(rnorm(142),10)), ncol=10),

"rho"=0.1,

"icept"=rep(0, 10),

"prec.s"=10,

"prec"=rep(4, 10),

"sigma.ts"=0.1

)

}

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 1

)

# Run model

fit.base_prior <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4w_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)module glm loadedCompiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45400

Initializing modelBase \(\lambda\) Prior with Alt Tune A \(\xi = 0.1\)

\[\lambda \sim N^+(0,.44)\] \[\xi = 0.1\]

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 0.1

)

# Run model

fit.base_alt_a <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4w_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45400

Initializing modelprint(fit.base_alt_a, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4w_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.851 0.338 0.000 1.000 1.000 1.000 1.000 1.00 4000

gamma[109,1,1,2] 0.149 0.338 0.000 0.000 0.000 0.000 1.000 1.00 4000

gamma[109,1,2,1] 0.150 0.342 0.000 0.000 0.000 0.000 1.000 1.77 7

gamma[109,1,2,2] 0.850 0.342 0.000 1.000 1.000 1.000 1.000 1.25 30

gamma[98,1,1,1] 0.666 0.448 0.000 0.019 1.000 1.000 1.000 1.00 4000

gamma[98,1,1,2] 0.334 0.448 0.000 0.000 0.000 0.981 1.000 1.00 3200

gamma[98,1,2,1] 0.357 0.463 0.000 0.000 0.000 0.999 1.000 1.48 10

gamma[98,1,2,2] 0.643 0.463 0.000 0.001 1.000 1.000 1.000 1.98 7

lambda.std[1] 0.772 0.171 0.276 0.707 0.831 0.892 0.947 1.22 22

lambda.std[2] 0.850 0.111 0.554 0.815 0.880 0.925 0.967 1.22 22

lambda.std[3] 0.899 0.075 0.708 0.878 0.921 0.948 0.970 1.26 25

lambda.std[4] 0.576 0.252 0.038 0.401 0.630 0.785 0.916 1.14 30

lambda.std[5] 0.814 0.121 0.496 0.764 0.845 0.898 0.955 1.29 16

lambda.std[6] 0.505 0.246 0.039 0.308 0.534 0.721 0.879 1.00 560

lambda.std[7] 0.412 0.246 0.019 0.202 0.416 0.610 0.864 1.00 610

lambda.std[8] 0.485 0.252 0.030 0.278 0.510 0.700 0.872 1.04 72

lambda.std[9] 0.603 0.250 0.046 0.441 0.681 0.800 0.913 1.28 16

lambda.std[10] 0.761 0.202 0.146 0.703 0.836 0.900 0.946 1.34 17

omega[109,1,2] 0.224 0.248 0.000 0.014 0.120 0.382 0.824 1.31 19

omega[98,1,2] 0.780 0.235 0.193 0.646 0.868 0.976 1.000 1.01 530

pi[109,1,2] 0.272 0.302 0.000 0.013 0.141 0.476 0.963 1.31 18

pi[98,1,2] 0.603 0.364 0.000 0.248 0.718 0.954 1.000 1.40 14

reli.omega 0.938 0.021 0.886 0.927 0.943 0.955 0.967 1.23 17

deviance 2743.862 46.630 2659.313 2711.985 2742.954 2773.882 2840.681 1.09 36

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 994.6 and DIC = 3738.5

DIC is an estimate of expected predictive error (lower deviance is better).Base \(\lambda\) Prior with Alt Tune B \(\xi = 10\)

\[\lambda \sim N^+(0,.44)\] \[\xi = 10\]

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 10

)

# Run model

fit.base_alt_b <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4w_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45400

Initializing modelprint(fit.base_alt_b, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4w_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.852 0.105 0.590 0.795 0.873 0.932 0.987 1.00 4000

gamma[109,1,1,2] 0.148 0.105 0.013 0.068 0.127 0.205 0.410 1.00 4000

gamma[109,1,2,1] 0.142 0.103 0.011 0.062 0.118 0.198 0.397 1.00 3400

gamma[109,1,2,2] 0.858 0.103 0.603 0.802 0.882 0.938 0.989 1.01 550

gamma[98,1,1,1] 0.668 0.142 0.357 0.574 0.681 0.775 0.903 1.00 2600

gamma[98,1,1,2] 0.332 0.142 0.097 0.225 0.319 0.426 0.643 1.00 3600

gamma[98,1,2,1] 0.356 0.144 0.107 0.251 0.343 0.454 0.657 1.00 4000

gamma[98,1,2,2] 0.644 0.144 0.343 0.546 0.657 0.749 0.893 1.00 4000

lambda.std[1] 0.786 0.138 0.410 0.732 0.824 0.883 0.945 1.08 99

lambda.std[2] 0.852 0.119 0.527 0.809 0.889 0.935 0.976 1.03 200

lambda.std[3] 0.905 0.058 0.760 0.887 0.920 0.941 0.965 1.09 61

lambda.std[4] 0.711 0.226 0.114 0.601 0.793 0.881 0.946 1.09 46

lambda.std[5] 0.637 0.219 0.137 0.496 0.690 0.810 0.934 1.05 76

lambda.std[6] 0.476 0.243 0.034 0.281 0.497 0.681 0.872 1.03 88

lambda.std[7] 0.494 0.242 0.035 0.298 0.529 0.701 0.865 1.01 280

lambda.std[8] 0.430 0.246 0.021 0.217 0.434 0.644 0.833 1.00 560

lambda.std[9] 0.680 0.177 0.228 0.593 0.724 0.812 0.902 1.04 170

lambda.std[10] 0.887 0.072 0.699 0.863 0.904 0.935 0.967 1.06 64

omega[109,1,2] 0.268 0.187 0.024 0.123 0.224 0.371 0.718 1.01 410

omega[98,1,2] 0.468 0.142 0.188 0.371 0.478 0.553 0.756 1.00 1300

pi[109,1,2] 0.184 0.242 0.000 0.006 0.069 0.280 0.861 1.01 300

pi[98,1,2] 0.322 0.328 0.000 0.019 0.202 0.584 0.981 1.10 150

reli.omega 0.943 0.020 0.894 0.933 0.947 0.959 0.971 1.06 59

deviance 3572.185 33.965 3504.143 3549.780 3572.593 3594.369 3639.753 1.04 73

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 553.2 and DIC = 4125.3

DIC is an estimate of expected predictive error (lower deviance is better).Base \(\lambda\) Prior with Alt Tune C \(\xi U(0.5,1.5)\)

\[\lambda \sim N^+(0,.44)\] \[\xi \sim Uniform(0.5,1.5)\]

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]",

"xi")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2

)

# Run model

fit.base_alt_c <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4w_xi_uniform.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4588

Total graph size: 45401

Initializing modelprint(fit.base_alt_c, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4w_xi_uniform.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.850 0.230 0.159 0.793 0.972 0.999 1.000 1.00 2300

gamma[109,1,1,2] 0.150 0.230 0.000 0.001 0.028 0.207 0.841 1.00 3200

gamma[109,1,2,1] 0.104 0.175 0.000 0.001 0.019 0.125 0.655 1.01 1700

gamma[109,1,2,2] 0.896 0.175 0.345 0.875 0.981 0.999 1.000 1.03 770

gamma[98,1,1,1] 0.665 0.309 0.038 0.426 0.753 0.947 1.000 1.00 4000

gamma[98,1,1,2] 0.335 0.309 0.000 0.053 0.247 0.574 0.962 1.00 4000

gamma[98,1,2,1] 0.471 0.316 0.002 0.180 0.462 0.753 0.980 1.00 4000

gamma[98,1,2,2] 0.529 0.316 0.020 0.247 0.538 0.820 0.998 1.01 840

lambda.std[1] 0.820 0.110 0.527 0.774 0.848 0.896 0.950 1.09 52

lambda.std[2] 0.822 0.149 0.411 0.769 0.874 0.924 0.967 1.08 63

lambda.std[3] 0.920 0.046 0.795 0.898 0.932 0.952 0.974 1.07 58

lambda.std[4] 0.744 0.214 0.136 0.656 0.823 0.902 0.955 1.00 1100

lambda.std[5] 0.613 0.230 0.104 0.460 0.654 0.804 0.932 1.06 57

lambda.std[6] 0.505 0.243 0.040 0.312 0.530 0.711 0.877 1.03 120

lambda.std[7] 0.481 0.243 0.034 0.287 0.503 0.689 0.856 1.04 100

lambda.std[8] 0.446 0.259 0.021 0.219 0.445 0.672 0.871 1.02 110

lambda.std[9] 0.706 0.163 0.269 0.628 0.746 0.825 0.914 1.03 130

lambda.std[10] 0.884 0.066 0.706 0.859 0.900 0.929 0.959 1.08 77

omega[109,1,2] 0.216 0.212 0.000 0.031 0.141 0.360 0.719 1.01 270

omega[98,1,2] 0.580 0.224 0.133 0.430 0.568 0.759 0.968 1.00 4000

pi[109,1,2] 0.163 0.226 0.000 0.003 0.052 0.248 0.807 1.02 160

pi[98,1,2] 0.293 0.326 0.000 0.009 0.142 0.535 0.985 1.02 700

reli.omega 0.946 0.019 0.897 0.937 0.952 0.960 0.971 1.11 35

xi 1.379 0.091 1.159 1.323 1.399 1.454 1.495 1.03 170

deviance 3292.296 44.610 3205.152 3262.394 3291.263 3321.612 3383.116 1.01 200

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 980.5 and DIC = 4272.8

DIC is an estimate of expected predictive error (lower deviance is better).Base \(\lambda\) Prior with Alt Tune D \(\xi G(1,1)\)

\[\lambda \sim N^+(0,.44)\] \[\xi \sim Gamma(1,1)\]

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]",

"xi")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2

)

# Run model

fit.base_alt_d <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4w_xi_gamma.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4588

Total graph size: 45400

Initializing modelprint(fit.base_alt_d, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4w_xi_gamma.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.847 0.167 0.395 0.779 0.903 0.974 1.000 1.03 840

gamma[109,1,1,2] 0.153 0.167 0.000 0.026 0.097 0.221 0.605 1.07 84

gamma[109,1,2,1] 0.129 0.147 0.000 0.019 0.077 0.193 0.532 1.09 53

gamma[109,1,2,2] 0.871 0.147 0.468 0.807 0.923 0.981 1.000 1.02 820

gamma[98,1,1,1] 0.663 0.223 0.175 0.514 0.697 0.845 0.985 1.03 380

gamma[98,1,1,2] 0.337 0.223 0.015 0.155 0.303 0.486 0.825 1.05 220

gamma[98,1,2,1] 0.402 0.222 0.035 0.231 0.383 0.563 0.852 1.02 2200

gamma[98,1,2,2] 0.598 0.222 0.148 0.437 0.617 0.769 0.965 1.05 130

lambda.std[1] 0.818 0.123 0.496 0.766 0.851 0.905 0.956 1.05 360

lambda.std[2] 0.859 0.111 0.558 0.817 0.894 0.937 0.972 1.02 160

lambda.std[3] 0.912 0.063 0.756 0.892 0.927 0.951 0.975 1.12 180

lambda.std[4] 0.791 0.195 0.211 0.743 0.866 0.920 0.964 1.12 40

lambda.std[5] 0.642 0.215 0.132 0.508 0.676 0.810 0.942 1.05 79

lambda.std[6] 0.475 0.236 0.035 0.288 0.498 0.669 0.857 1.01 490

lambda.std[7] 0.435 0.243 0.020 0.232 0.443 0.632 0.866 1.02 160

lambda.std[8] 0.473 0.263 0.027 0.245 0.490 0.696 0.892 1.03 110

lambda.std[9] 0.713 0.160 0.305 0.633 0.745 0.827 0.940 1.07 68

lambda.std[10] 0.895 0.071 0.694 0.871 0.917 0.941 0.962 1.13 100

omega[109,1,2] 0.250 0.205 0.005 0.079 0.202 0.383 0.739 1.03 180

omega[98,1,2] 0.503 0.180 0.160 0.384 0.499 0.617 0.876 1.01 250

pi[109,1,2] 0.175 0.240 0.000 0.004 0.059 0.259 0.855 1.02 390

pi[98,1,2] 0.272 0.318 0.000 0.004 0.112 0.499 0.972 1.01 720

reli.omega 0.950 0.020 0.898 0.942 0.955 0.964 0.977 1.20 22

xi 4.243 2.109 2.062 2.701 3.331 5.562 9.129 2.64 5

deviance 3466.241 70.726 3330.781 3415.105 3464.109 3520.033 3596.189 1.80 7

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 1258.2 and DIC = 4724.4

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior A with Base Tune \(\xi = 1\)

\[\lambda \sim N^+(0,.01)\] \[\xi = 1\]

# Save parameters

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 1

)

# Run model

fit.alt_a_base <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Aw_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45400

Initializing modelprint(fit.alt_a_base, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Aw_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.850 0.251 0.099 0.811 0.985 1.000 1.000 1.00 4000

gamma[109,1,1,2] 0.150 0.251 0.000 0.000 0.015 0.189 0.901 1.00 2600

gamma[109,1,2,1] 0.087 0.186 0.000 0.000 0.002 0.066 0.731 1.26 26

gamma[109,1,2,2] 0.913 0.186 0.269 0.934 0.998 1.000 1.000 1.05 1700

gamma[98,1,1,1] 0.673 0.327 0.017 0.407 0.797 0.973 1.000 1.00 4000

gamma[98,1,1,2] 0.327 0.327 0.000 0.027 0.203 0.593 0.983 1.00 3500

gamma[98,1,2,1] 0.557 0.327 0.002 0.262 0.606 0.863 0.994 1.02 280

gamma[98,1,2,2] 0.443 0.327 0.006 0.137 0.394 0.738 0.998 1.01 440

lambda.std[1] 0.928 0.090 0.658 0.917 0.958 0.976 0.990 1.30 37

lambda.std[2] 0.977 0.040 0.841 0.978 0.991 0.997 0.998 1.64 10

lambda.std[3] 0.952 0.044 0.841 0.944 0.965 0.977 0.989 1.38 17

lambda.std[4] 0.910 0.103 0.592 0.896 0.945 0.966 0.984 1.09 88

lambda.std[5] 0.852 0.153 0.437 0.782 0.908 0.969 0.990 1.40 12

lambda.std[6] 0.593 0.267 0.043 0.397 0.660 0.819 0.943 1.00 1300

lambda.std[7] 0.567 0.269 0.029 0.362 0.623 0.793 0.932 1.02 170

lambda.std[8] 0.574 0.278 0.033 0.349 0.629 0.819 0.944 1.09 40

lambda.std[9] 0.861 0.120 0.539 0.819 0.901 0.945 0.976 1.34 17

lambda.std[10] 0.936 0.054 0.794 0.920 0.953 0.971 0.986 1.22 29

omega[109,1,2] 0.153 0.202 0.000 0.003 0.056 0.245 0.700 1.14 87

omega[98,1,2] 0.623 0.259 0.101 0.431 0.641 0.852 0.992 1.00 4000

pi[109,1,2] 0.107 0.199 0.000 0.000 0.005 0.112 0.742 1.01 260

pi[98,1,2] 0.160 0.277 0.000 0.000 0.004 0.194 0.957 1.03 220

reli.omega 0.988 0.005 0.976 0.985 0.989 0.991 0.993 1.70 8

deviance 3138.831 46.429 3050.806 3107.466 3136.470 3169.707 3232.848 1.15 22

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 926.4 and DIC = 4065.2

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior A with Alt Tune A \(\xi = 0.1\)

\[\lambda \sim N^+(0,.01)\] \[\xi = 0.1\]

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 0.1

)

# Run model

fit.alt_a_alt_a <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Aw_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45400

Initializing modelprint(fit.alt_a_alt_a, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Aw_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.843 0.348 0.000 1.000 1.000 1.000 1.000 1.01 2100

gamma[109,1,1,2] 0.157 0.348 0.000 0.000 0.000 0.000 1.000 1.00 4000

gamma[109,1,2,1] 0.130 0.318 0.000 0.000 0.000 0.000 1.000 3.72 5

gamma[109,1,2,2] 0.870 0.318 0.000 1.000 1.000 1.000 1.000 1.49 15

gamma[98,1,1,1] 0.669 0.449 0.000 0.015 1.000 1.000 1.000 1.00 3800

gamma[98,1,1,2] 0.331 0.449 0.000 0.000 0.000 0.985 1.000 1.00 4000

gamma[98,1,2,1] 0.362 0.458 0.000 0.000 0.003 0.998 1.000 1.28 15

gamma[98,1,2,2] 0.638 0.458 0.000 0.002 0.997 1.000 1.000 1.21 24

lambda.std[1] 0.833 0.138 0.448 0.775 0.866 0.937 0.978 1.33 14

lambda.std[2] 0.968 0.033 0.877 0.960 0.978 0.990 0.995 1.25 17

lambda.std[3] 0.939 0.062 0.750 0.925 0.959 0.977 0.990 1.38 15

lambda.std[4] 0.553 0.264 0.037 0.356 0.588 0.776 0.935 1.10 34

lambda.std[5] 0.851 0.154 0.435 0.797 0.908 0.961 0.991 1.66 9

lambda.std[6] 0.650 0.256 0.068 0.483 0.725 0.863 0.948 1.07 57

lambda.std[7] 0.590 0.286 0.039 0.347 0.670 0.846 0.942 1.10 33

lambda.std[8] 0.611 0.280 0.041 0.397 0.690 0.857 0.945 1.11 32

lambda.std[9] 0.785 0.223 0.108 0.727 0.880 0.929 0.970 1.62 10

lambda.std[10] 0.853 0.185 0.248 0.832 0.926 0.962 0.990 1.24 23

omega[109,1,2] 0.291 0.275 0.000 0.034 0.212 0.506 0.883 1.12 37

omega[98,1,2] 0.759 0.245 0.162 0.597 0.840 0.972 1.000 1.02 180

pi[109,1,2] 0.332 0.312 0.000 0.036 0.246 0.581 0.985 1.11 43

pi[98,1,2] 0.618 0.353 0.001 0.305 0.725 0.954 1.000 1.11 81

reli.omega 0.974 0.019 0.924 0.969 0.980 0.987 0.991 1.89 7

deviance 2690.927 44.285 2608.441 2660.855 2689.680 2720.160 2780.776 1.20 17

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 803.5 and DIC = 3494.4

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior A with Alt Tune B \(\xi = 10\)

\[\lambda \sim N^+(0,.01)\] \[\xi = 10\]

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 10

)

# Run model

fit.alt_a_alt_b <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Aw_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45400

Initializing modelprint(fit.alt_a_alt_b, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Aw_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.849 0.108 0.576 0.793 0.872 0.931 0.988 1.00 2000

gamma[109,1,1,2] 0.151 0.108 0.012 0.069 0.128 0.207 0.424 1.00 1600

gamma[109,1,2,1] 0.141 0.103 0.012 0.061 0.116 0.199 0.396 1.00 1500

gamma[109,1,2,2] 0.859 0.103 0.604 0.801 0.884 0.939 0.988 1.00 2100

gamma[98,1,1,1] 0.668 0.141 0.373 0.576 0.678 0.773 0.907 1.00 4000

gamma[98,1,1,2] 0.332 0.141 0.093 0.227 0.322 0.424 0.627 1.00 4000

gamma[98,1,2,1] 0.368 0.143 0.121 0.260 0.364 0.466 0.660 1.00 2100

gamma[98,1,2,2] 0.632 0.143 0.340 0.534 0.636 0.740 0.879 1.00 3900

lambda.std[1] 0.867 0.145 0.462 0.832 0.920 0.959 0.979 1.13 220

lambda.std[2] 0.964 0.058 0.792 0.959 0.989 0.995 0.998 1.56 11

lambda.std[3] 0.962 0.034 0.865 0.952 0.974 0.983 0.994 1.20 18

lambda.std[4] 0.950 0.057 0.789 0.946 0.968 0.979 0.991 1.28 22

lambda.std[5] 0.833 0.167 0.360 0.775 0.890 0.953 0.982 1.19 30

lambda.std[6] 0.587 0.270 0.047 0.376 0.648 0.810 0.954 1.01 380

lambda.std[7] 0.566 0.264 0.035 0.366 0.628 0.792 0.918 1.01 340

lambda.std[8] 0.623 0.264 0.056 0.433 0.701 0.838 0.964 1.02 150

lambda.std[9] 0.811 0.148 0.409 0.751 0.858 0.916 0.960 1.16 49

lambda.std[10] 0.959 0.031 0.877 0.948 0.970 0.979 0.988 1.19 18

omega[109,1,2] 0.247 0.183 0.023 0.106 0.203 0.340 0.699 1.01 300

omega[98,1,2] 0.446 0.147 0.172 0.342 0.449 0.537 0.746 1.01 350

pi[109,1,2] 0.155 0.231 0.000 0.001 0.035 0.220 0.835 1.05 160

pi[98,1,2] 0.227 0.312 0.000 0.000 0.042 0.392 0.967 1.06 130

reli.omega 0.987 0.005 0.973 0.986 0.988 0.990 0.993 1.39 13

deviance 3544.108 32.549 3480.266 3521.372 3543.494 3565.674 3610.166 1.02 120

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 517.2 and DIC = 4061.3

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior A with Alt Tune C \(\xi U(0.5,1.5)\)

\[\lambda \sim N^+(0,.01)\] \[\xi \sim Uniform(0.5,1.5)\]

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]",

"xi")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2

)

# Run model

fit.alt_a_alt_c <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Aw_xi_uniform.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4588

Total graph size: 45401

Initializing modelprint(fit.alt_a_alt_c, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Aw_xi_uniform.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.850 0.239 0.144 0.798 0.980 1.000 1.000 1.00 4000

gamma[109,1,1,2] 0.150 0.239 0.000 0.000 0.020 0.202 0.856 1.00 960

gamma[109,1,2,1] 0.110 0.200 0.000 0.000 0.010 0.121 0.762 1.08 120

gamma[109,1,2,2] 0.890 0.200 0.238 0.879 0.990 1.000 1.000 1.04 530

gamma[98,1,1,1] 0.668 0.315 0.030 0.421 0.768 0.955 1.000 1.01 1200

gamma[98,1,1,2] 0.332 0.315 0.000 0.045 0.232 0.579 0.970 1.00 1300

gamma[98,1,2,1] 0.519 0.322 0.001 0.229 0.553 0.812 0.990 1.04 230

gamma[98,1,2,2] 0.481 0.322 0.010 0.188 0.447 0.771 0.999 1.01 1100

lambda.std[1] 0.924 0.076 0.712 0.906 0.952 0.970 0.985 1.19 33

lambda.std[2] 0.952 0.065 0.758 0.944 0.977 0.988 0.997 1.57 10

lambda.std[3] 0.965 0.032 0.876 0.959 0.978 0.984 0.992 1.07 77

lambda.std[4] 0.906 0.114 0.600 0.896 0.941 0.966 0.990 1.25 56

lambda.std[5] 0.869 0.163 0.430 0.793 0.958 0.987 0.994 1.53 9

lambda.std[6] 0.520 0.263 0.040 0.300 0.552 0.747 0.916 1.00 620

lambda.std[7] 0.602 0.275 0.043 0.381 0.685 0.829 0.952 1.03 120

lambda.std[8] 0.614 0.264 0.050 0.431 0.680 0.840 0.931 1.03 150

lambda.std[9] 0.862 0.128 0.499 0.816 0.904 0.953 0.981 1.02 200

lambda.std[10] 0.964 0.035 0.874 0.957 0.976 0.986 0.993 1.16 39

omega[109,1,2] 0.180 0.214 0.000 0.008 0.086 0.297 0.720 1.03 180

omega[98,1,2] 0.605 0.248 0.114 0.430 0.614 0.816 0.985 1.00 660

pi[109,1,2] 0.125 0.219 0.000 0.000 0.012 0.145 0.826 1.03 210

pi[98,1,2] 0.204 0.316 0.000 0.000 0.013 0.317 0.999 1.03 340

reli.omega 0.987 0.005 0.975 0.984 0.989 0.992 0.994 1.67 8

xi 1.233 0.187 0.905 1.065 1.254 1.410 1.491 1.37 11

deviance 3195.307 68.226 3064.667 3145.080 3197.699 3247.247 3322.010 1.68 8

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 1281.0 and DIC = 4476.3

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior A with Alt Tune D \(\xi G(1,1)\)

\[\lambda \sim N^+(0,.01)\] \[\xi \sim Gamma(1,1)\]

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]",

"xi")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2

)

# Run model

fit.alt_a_alt_d <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Aw_xi_gamma.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4588

Total graph size: 45400

Initializing modelprint(fit.alt_a_alt_d, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Aw_xi_gamma.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.851 0.167 0.394 0.776 0.911 0.980 1.000 1.01 4000

gamma[109,1,1,2] 0.149 0.167 0.000 0.020 0.089 0.224 0.606 1.04 160

gamma[109,1,2,1] 0.120 0.143 0.000 0.015 0.069 0.171 0.514 1.08 57

gamma[109,1,2,2] 0.880 0.143 0.486 0.829 0.931 0.985 1.000 1.02 600

gamma[98,1,1,1] 0.673 0.224 0.187 0.523 0.712 0.856 0.984 1.01 3200

gamma[98,1,1,2] 0.327 0.224 0.016 0.144 0.288 0.477 0.813 1.02 360

gamma[98,1,2,1] 0.423 0.233 0.030 0.235 0.409 0.596 0.871 1.08 100

gamma[98,1,2,2] 0.577 0.233 0.129 0.404 0.591 0.765 0.970 1.02 290

lambda.std[1] 0.910 0.107 0.601 0.895 0.947 0.974 0.989 1.47 14

lambda.std[2] 0.981 0.025 0.920 0.976 0.988 0.996 0.998 1.40 15

lambda.std[3] 0.949 0.039 0.841 0.940 0.959 0.973 0.988 1.24 23

lambda.std[4] 0.917 0.117 0.558 0.905 0.961 0.981 0.991 1.34 24

lambda.std[5] 0.848 0.165 0.350 0.805 0.903 0.961 0.986 1.28 22

lambda.std[6] 0.573 0.267 0.039 0.371 0.624 0.803 0.934 1.03 150

lambda.std[7] 0.582 0.262 0.041 0.391 0.630 0.800 0.962 1.03 120

lambda.std[8] 0.559 0.265 0.038 0.345 0.608 0.790 0.924 1.03 120

lambda.std[9] 0.858 0.116 0.557 0.819 0.890 0.932 0.986 1.18 47

lambda.std[10] 0.945 0.043 0.853 0.932 0.956 0.970 0.987 1.13 160

omega[109,1,2] 0.207 0.194 0.002 0.051 0.149 0.314 0.697 1.05 83

omega[98,1,2] 0.490 0.200 0.118 0.350 0.493 0.626 0.875 1.01 230

pi[109,1,2] 0.125 0.215 0.000 0.000 0.013 0.154 0.797 1.14 37

pi[98,1,2] 0.169 0.280 0.000 0.000 0.007 0.228 0.937 1.12 42

reli.omega 0.987 0.006 0.972 0.983 0.988 0.991 0.994 1.68 8

xi 3.792 1.371 2.161 2.712 3.346 4.609 6.947 2.06 6

deviance 3418.624 61.302 3300.729 3374.260 3416.884 3462.215 3539.272 1.36 11

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 1325.5 and DIC = 4744.1

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior B with Base Tune \(\xi = 1\)

\[\lambda \sim N^+(0,5)\] \[\xi = 1\]

# Save parameters

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 1

)

# Run model

fit.alt_b_base <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Bw_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45399

Initializing modelprint(fit.alt_b_base, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Bw_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.850 0.252 0.112 0.815 0.988 1.000 1.000 1.00 4000

gamma[109,1,1,2] 0.150 0.252 0.000 0.000 0.012 0.185 0.888 1.00 4000

gamma[109,1,2,1] 0.122 0.223 0.000 0.000 0.009 0.122 0.819 1.05 130

gamma[109,1,2,2] 0.878 0.223 0.181 0.878 0.991 1.000 1.000 1.02 880

gamma[98,1,1,1] 0.669 0.329 0.017 0.403 0.778 0.973 1.000 1.00 3800

gamma[98,1,1,2] 0.331 0.329 0.000 0.027 0.222 0.597 0.983 1.00 1200

gamma[98,1,2,1] 0.419 0.354 0.000 0.056 0.366 0.768 0.990 1.03 170

gamma[98,1,2,2] 0.581 0.354 0.010 0.232 0.634 0.944 1.000 1.01 440

lambda.std[1] 0.736 0.154 0.321 0.666 0.777 0.845 0.918 1.07 89

lambda.std[2] 0.754 0.144 0.404 0.680 0.788 0.862 0.933 1.02 280

lambda.std[3] 0.852 0.098 0.583 0.828 0.877 0.909 0.947 1.13 100

lambda.std[4] 0.587 0.258 0.042 0.395 0.655 0.800 0.917 1.05 75

lambda.std[5] 0.492 0.230 0.055 0.316 0.510 0.675 0.881 1.02 210

lambda.std[6] 0.433 0.234 0.025 0.244 0.439 0.625 0.837 1.01 270

lambda.std[7] 0.415 0.231 0.020 0.215 0.433 0.603 0.808 1.02 170

lambda.std[8] 0.317 0.212 0.012 0.136 0.294 0.476 0.758 1.01 370

lambda.std[9] 0.615 0.196 0.134 0.500 0.667 0.763 0.880 1.02 340

lambda.std[10] 0.820 0.096 0.577 0.786 0.842 0.883 0.930 1.09 73

omega[109,1,2] 0.253 0.229 0.000 0.049 0.192 0.419 0.777 1.01 480

omega[98,1,2] 0.612 0.230 0.139 0.457 0.610 0.808 0.978 1.00 4000

pi[109,1,2] 0.224 0.261 0.000 0.014 0.113 0.360 0.906 1.01 460

pi[98,1,2] 0.388 0.344 0.000 0.044 0.314 0.706 0.989 1.03 160

reli.omega 0.903 0.030 0.833 0.885 0.908 0.924 0.948 1.03 100

deviance 3241.202 43.606 3155.809 3210.705 3241.425 3270.884 3327.043 1.01 200

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 937.0 and DIC = 4178.2

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior B with Alt Tune A \(\xi = 0.1\)

\[\lambda \sim N^+(0,5)\] \[\xi = 0.1\]

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 0.1

)

# Run model

fit.alt_b_alt_a <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Bw_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45399

Initializing modelprint(fit.alt_b_alt_a, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Bw_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.844 0.345 0.000 1.000 1.000 1.000 1.000 1.00 2700

gamma[109,1,1,2] 0.156 0.345 0.000 0.000 0.000 0.000 1.000 1.00 3300

gamma[109,1,2,1] 0.062 0.222 0.000 0.000 0.000 0.000 0.997 1.55 9

gamma[109,1,2,2] 0.938 0.222 0.003 1.000 1.000 1.000 1.000 1.12 150

gamma[98,1,1,1] 0.666 0.451 0.000 0.012 1.000 1.000 1.000 1.00 3200

gamma[98,1,1,2] 0.334 0.451 0.000 0.000 0.000 0.988 1.000 1.00 3200

gamma[98,1,2,1] 0.409 0.464 0.000 0.000 0.034 0.999 1.000 1.09 39

gamma[98,1,2,2] 0.591 0.464 0.000 0.001 0.966 1.000 1.000 1.07 90

lambda.std[1] 0.732 0.148 0.365 0.652 0.766 0.845 0.921 1.12 32

lambda.std[2] 0.833 0.088 0.623 0.790 0.851 0.897 0.945 1.05 71

lambda.std[3] 0.720 0.194 0.156 0.643 0.779 0.859 0.928 1.12 38

lambda.std[4] 0.455 0.259 0.027 0.228 0.466 0.684 0.871 1.18 19

lambda.std[5] 0.763 0.159 0.313 0.695 0.805 0.877 0.942 1.19 36

lambda.std[6] 0.445 0.231 0.025 0.256 0.463 0.637 0.830 1.01 230

lambda.std[7] 0.392 0.241 0.018 0.181 0.385 0.588 0.824 1.02 170

lambda.std[8] 0.435 0.242 0.026 0.228 0.440 0.639 0.843 1.11 29

lambda.std[9] 0.502 0.273 0.025 0.270 0.527 0.743 0.909 1.20 17

lambda.std[10] 0.730 0.161 0.282 0.659 0.773 0.849 0.910 1.14 57

omega[109,1,2] 0.292 0.260 0.000 0.060 0.228 0.477 0.861 1.03 140

omega[98,1,2] 0.731 0.244 0.169 0.553 0.799 0.948 1.000 1.02 210

pi[109,1,2] 0.315 0.284 0.000 0.060 0.241 0.518 0.940 1.03 120

pi[98,1,2] 0.541 0.354 0.000 0.188 0.586 0.886 1.000 1.20 110

reli.omega 0.901 0.031 0.826 0.886 0.907 0.923 0.945 1.21 18

deviance 2754.377 47.736 2662.419 2721.675 2754.949 2786.523 2847.835 1.04 78

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 1096.0 and DIC = 3850.3

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior B with Alt Tune B \(\xi = 10\)

\[\lambda \sim N^+(0,5)\] \[\xi = 10\]

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 10

)

# Run model

fit.alt_b_alt_b <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Bw_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45399

Initializing modelprint(fit.alt_b_alt_b, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Bw_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.850 0.107 0.586 0.792 0.874 0.933 0.988 1.00 3100

gamma[109,1,1,2] 0.150 0.107 0.012 0.067 0.126 0.208 0.414 1.00 4000

gamma[109,1,2,1] 0.141 0.101 0.013 0.064 0.116 0.198 0.394 1.00 4000

gamma[109,1,2,2] 0.859 0.101 0.606 0.802 0.884 0.936 0.987 1.00 4000

gamma[98,1,1,1] 0.670 0.140 0.372 0.576 0.680 0.776 0.907 1.00 4000

gamma[98,1,1,2] 0.330 0.140 0.093 0.224 0.320 0.424 0.628 1.00 4000

gamma[98,1,2,1] 0.352 0.142 0.109 0.243 0.343 0.448 0.645 1.00 1800

gamma[98,1,2,2] 0.648 0.142 0.355 0.552 0.657 0.757 0.891 1.00 1400

lambda.std[1] 0.742 0.156 0.336 0.678 0.779 0.851 0.937 1.06 660

lambda.std[2] 0.774 0.139 0.411 0.717 0.813 0.872 0.935 1.03 310

lambda.std[3] 0.869 0.067 0.696 0.846 0.885 0.914 0.944 1.03 2700

lambda.std[4] 0.653 0.233 0.091 0.518 0.723 0.838 0.926 1.07 54

lambda.std[5] 0.529 0.228 0.065 0.356 0.554 0.717 0.881 1.01 610

lambda.std[6] 0.432 0.231 0.026 0.242 0.439 0.620 0.822 1.01 230

lambda.std[7] 0.407 0.226 0.022 0.215 0.406 0.598 0.803 1.01 460

lambda.std[8] 0.368 0.227 0.015 0.175 0.356 0.549 0.790 1.00 840

lambda.std[9] 0.664 0.176 0.211 0.575 0.705 0.793 0.893 1.04 150

lambda.std[10] 0.844 0.064 0.681 0.813 0.857 0.889 0.933 1.00 830

omega[109,1,2] 0.300 0.206 0.028 0.137 0.249 0.429 0.778 1.00 3300

omega[98,1,2] 0.478 0.143 0.200 0.383 0.483 0.561 0.777 1.00 830

pi[109,1,2] 0.231 0.272 0.000 0.012 0.110 0.376 0.917 1.01 1600

pi[98,1,2] 0.360 0.337 0.000 0.030 0.266 0.666 0.982 1.01 420

reli.omega 0.914 0.028 0.848 0.898 0.918 0.934 0.955 1.01 680

deviance 3581.821 34.496 3514.075 3559.455 3581.231 3604.761 3650.483 1.01 410

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 591.1 and DIC = 4172.9

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior B with Alt Tune C \(\xi U(0.5,1.5)\)

\[\lambda \sim N^+(0,5)\] \[\xi \sim Uniform(0.5,1.5)\]

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]",

"xi")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2

)

# Run model

fit.alt_b_alt_c <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Bw_xi_uniform.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4588

Total graph size: 45400

Initializing modelprint(fit.alt_b_alt_c, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Bw_xi_uniform.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.847 0.243 0.124 0.801 0.976 0.999 1.000 1.01 1500

gamma[109,1,1,2] 0.153 0.243 0.000 0.001 0.024 0.199 0.876 1.00 1900

gamma[109,1,2,1] 0.115 0.200 0.000 0.000 0.016 0.128 0.728 1.08 76

gamma[109,1,2,2] 0.885 0.200 0.272 0.872 0.984 1.000 1.000 1.01 1400

gamma[98,1,1,1] 0.664 0.310 0.032 0.417 0.761 0.948 1.000 1.01 2700

gamma[98,1,1,2] 0.336 0.310 0.000 0.052 0.239 0.583 0.968 1.01 1100

gamma[98,1,2,1] 0.473 0.328 0.001 0.158 0.478 0.773 0.988 1.02 410

gamma[98,1,2,2] 0.527 0.328 0.012 0.227 0.522 0.842 0.999 1.02 230

lambda.std[1] 0.773 0.123 0.454 0.716 0.804 0.860 0.930 1.10 45

lambda.std[2] 0.734 0.162 0.321 0.650 0.774 0.856 0.934 1.01 500

lambda.std[3] 0.850 0.072 0.661 0.822 0.866 0.899 0.938 1.03 220

lambda.std[4] 0.608 0.239 0.058 0.461 0.669 0.800 0.906 1.03 140

lambda.std[5] 0.530 0.239 0.053 0.353 0.549 0.733 0.897 1.08 52

lambda.std[6] 0.434 0.240 0.024 0.230 0.441 0.629 0.833 1.02 130

lambda.std[7] 0.392 0.224 0.017 0.205 0.391 0.578 0.781 1.01 400

lambda.std[8] 0.340 0.223 0.015 0.153 0.310 0.509 0.787 1.01 370

lambda.std[9] 0.658 0.172 0.229 0.565 0.695 0.788 0.889 1.02 250

lambda.std[10] 0.831 0.091 0.581 0.801 0.852 0.890 0.937 1.08 580

omega[109,1,2] 0.240 0.226 0.000 0.043 0.175 0.398 0.781 1.02 180

omega[98,1,2] 0.587 0.227 0.136 0.432 0.577 0.770 0.969 1.01 780

pi[109,1,2] 0.201 0.255 0.000 0.008 0.080 0.311 0.906 1.04 120

pi[98,1,2] 0.295 0.319 0.000 0.015 0.161 0.528 0.969 1.06 78

reli.omega 0.908 0.030 0.835 0.893 0.912 0.928 0.949 1.05 91

xi 1.298 0.142 1.002 1.185 1.325 1.420 1.493 1.54 9

deviance 3299.383 49.059 3201.655 3266.032 3300.368 3333.349 3394.330 1.08 36

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 1102.1 and DIC = 4401.4

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior B with Alt Tune D \(\xi G(1,1)\)

\[\lambda \sim N^+(0,5)\] \[\xi \sim Gamma(1,1)\]

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]",

"xi")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2

)

# Run model

fit.alt_b_alt_d <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Bw_xi_gamma.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4588

Total graph size: 45399

Initializing modelprint(fit.alt_b_alt_d, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Bw_xi_gamma.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.851 0.171 0.387 0.778 0.914 0.979 1.000 1.00 4000

gamma[109,1,1,2] 0.149 0.171 0.000 0.021 0.086 0.222 0.613 1.02 450

gamma[109,1,2,1] 0.134 0.160 0.000 0.013 0.071 0.200 0.558 1.06 130

gamma[109,1,2,2] 0.866 0.160 0.442 0.800 0.929 0.987 1.000 1.00 1800

gamma[98,1,1,1] 0.666 0.227 0.166 0.509 0.707 0.853 0.984 1.01 1800

gamma[98,1,1,2] 0.334 0.227 0.016 0.147 0.293 0.491 0.834 1.02 910

gamma[98,1,2,1] 0.379 0.228 0.026 0.194 0.354 0.549 0.850 1.00 3200

gamma[98,1,2,2] 0.621 0.228 0.150 0.451 0.646 0.806 0.974 1.01 1300

lambda.std[1] 0.756 0.142 0.372 0.696 0.788 0.855 0.927 1.10 150

lambda.std[2] 0.736 0.164 0.291 0.666 0.779 0.853 0.929 1.01 280

lambda.std[3] 0.872 0.065 0.711 0.846 0.886 0.915 0.951 1.03 200

lambda.std[4] 0.617 0.237 0.071 0.472 0.683 0.803 0.921 1.00 800

lambda.std[5] 0.502 0.219 0.070 0.341 0.523 0.676 0.854 1.02 520

lambda.std[6] 0.415 0.229 0.026 0.229 0.417 0.593 0.845 1.00 1000

lambda.std[7] 0.393 0.230 0.020 0.203 0.386 0.573 0.815 1.00 1000

lambda.std[8] 0.363 0.229 0.018 0.167 0.341 0.542 0.813 1.00 3400

lambda.std[9] 0.649 0.175 0.206 0.558 0.684 0.778 0.892 1.01 1000

lambda.std[10] 0.829 0.079 0.627 0.792 0.846 0.885 0.931 1.01 450

omega[109,1,2] 0.265 0.214 0.003 0.081 0.221 0.416 0.761 1.00 710

omega[98,1,2] 0.516 0.180 0.157 0.405 0.508 0.633 0.880 1.00 3100

pi[109,1,2] 0.209 0.263 0.000 0.008 0.089 0.328 0.919 1.00 870

pi[98,1,2] 0.364 0.341 0.000 0.030 0.279 0.675 0.988 1.02 680

reli.omega 0.908 0.028 0.838 0.893 0.914 0.929 0.948 1.01 390

xi 3.602 0.936 2.020 2.932 3.580 4.186 5.554 1.24 18

deviance 3475.578 54.040 3360.692 3440.348 3479.706 3514.033 3569.981 1.16 22

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 1254.5 and DIC = 4730.1

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior C with Base Tune \(\xi = 1\)

\[\lambda \sim N^+(0,5)\] \[\xi = 1\]

# Save parameters

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 1

)

# Run model

fit.alt_c_base <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Cw_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45400

Initializing modelprint(fit.alt_c_base, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Cw_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.850 0.253 0.101 0.817 0.987 1.000 1.000 1.00 4000

gamma[109,1,1,2] 0.150 0.253 0.000 0.000 0.013 0.183 0.899 1.00 4000

gamma[109,1,2,1] 0.217 0.296 0.000 0.000 0.046 0.366 0.945 1.07 100

gamma[109,1,2,2] 0.783 0.296 0.055 0.634 0.954 1.000 1.000 1.02 590

gamma[98,1,1,1] 0.662 0.333 0.014 0.383 0.786 0.971 1.000 1.00 3400

gamma[98,1,1,2] 0.338 0.333 0.000 0.029 0.214 0.617 0.986 1.00 4000

gamma[98,1,2,1] 0.264 0.301 0.000 0.017 0.129 0.445 0.968 1.00 790

gamma[98,1,2,2] 0.736 0.301 0.032 0.555 0.871 0.983 1.000 1.01 1100

lambda.std[1] 0.386 0.170 0.042 0.263 0.402 0.517 0.666 1.01 520

lambda.std[2] 0.377 0.166 0.050 0.255 0.383 0.503 0.673 1.01 260

lambda.std[3] 0.466 0.169 0.091 0.354 0.490 0.593 0.738 1.04 82

lambda.std[4] 0.207 0.156 0.007 0.077 0.175 0.307 0.566 1.03 110

lambda.std[5] 0.226 0.151 0.012 0.103 0.203 0.330 0.561 1.00 1200

lambda.std[6] 0.222 0.147 0.010 0.104 0.204 0.320 0.549 1.00 1100

lambda.std[7] 0.211 0.146 0.008 0.092 0.188 0.306 0.553 1.01 230

lambda.std[8] 0.165 0.131 0.006 0.059 0.135 0.237 0.488 1.01 430

lambda.std[9] 0.357 0.165 0.039 0.235 0.366 0.481 0.649 1.02 260

lambda.std[10] 0.328 0.177 0.027 0.187 0.322 0.461 0.678 1.07 47

omega[109,1,2] 0.445 0.233 0.036 0.262 0.449 0.615 0.893 1.01 690

omega[98,1,2] 0.665 0.214 0.228 0.504 0.670 0.856 0.988 1.00 4000

pi[109,1,2] 0.537 0.303 0.027 0.272 0.547 0.815 0.995 1.01 1000

pi[98,1,2] 0.717 0.270 0.077 0.546 0.807 0.943 0.998 1.01 1100

reli.omega 0.514 0.103 0.293 0.448 0.522 0.591 0.686 1.07 43

deviance 3305.763 43.765 3222.727 3276.649 3305.116 3335.500 3390.118 1.01 210

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 944.6 and DIC = 4250.3

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior C with Alt Tune A \(\xi = 0.1\)

\[\lambda \sim N(0,5)\] \[\xi = 0.1\]

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 0.1

)

# Run model

fit.alt_c_alt_a <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Cw_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45400

Initializing modelprint(fit.alt_c_alt_a, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Cw_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.850 0.339 0.000 1.000 1.000 1.000 1.000 1.00 4000

gamma[109,1,1,2] 0.150 0.339 0.000 0.000 0.000 0.000 1.000 1.00 4000

gamma[109,1,2,1] 0.361 0.456 0.000 0.000 0.000 0.972 1.000 3.14 5

gamma[109,1,2,2] 0.639 0.456 0.000 0.028 1.000 1.000 1.000 1.37 13

gamma[98,1,1,1] 0.658 0.451 0.000 0.007 1.000 1.000 1.000 1.00 4000

gamma[98,1,1,2] 0.342 0.451 0.000 0.000 0.000 0.993 1.000 1.00 4000

gamma[98,1,2,1] 0.134 0.321 0.000 0.000 0.000 0.001 1.000 1.47 10

gamma[98,1,2,2] 0.866 0.321 0.000 0.999 1.000 1.000 1.000 1.35 27

lambda.std[1] 0.287 0.173 0.015 0.143 0.278 0.417 0.626 1.12 27

lambda.std[2] 0.318 0.167 0.023 0.187 0.318 0.440 0.645 1.04 65

lambda.std[3] 0.332 0.174 0.025 0.195 0.334 0.467 0.654 1.04 71

lambda.std[4] 0.179 0.140 0.006 0.066 0.147 0.265 0.512 1.01 340

lambda.std[5] 0.257 0.168 0.012 0.117 0.237 0.378 0.612 1.05 59

lambda.std[6] 0.230 0.149 0.010 0.104 0.215 0.337 0.546 1.00 3800

lambda.std[7] 0.200 0.139 0.008 0.082 0.179 0.295 0.500 1.01 380

lambda.std[8] 0.181 0.138 0.007 0.069 0.150 0.264 0.508 1.00 4000

lambda.std[9] 0.282 0.165 0.017 0.148 0.273 0.404 0.606 1.04 71

lambda.std[10] 0.237 0.155 0.010 0.109 0.219 0.339 0.577 1.03 120

omega[109,1,2] 0.396 0.314 0.000 0.073 0.369 0.674 0.950 1.62 10

omega[98,1,2] 0.836 0.225 0.204 0.758 0.946 0.997 1.000 1.12 34

pi[109,1,2] 0.714 0.287 0.095 0.495 0.793 0.993 1.000 1.21 17

pi[98,1,2] 0.854 0.215 0.193 0.796 0.957 0.998 1.000 1.18 29

reli.omega 0.420 0.113 0.196 0.345 0.427 0.500 0.626 1.04 75

deviance 2828.096 50.829 2727.386 2793.320 2829.475 2863.155 2925.655 1.54 9

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 796.5 and DIC = 3624.6

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior C with Alt Tune B \(\xi = 10\)

\[\lambda \sim N(0,5)\] \[\xi = 10\]

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2,

xi = 10

)

# Run model

fit.alt_c_alt_b <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Cw_xi.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4587

Total graph size: 45400

Initializing modelprint(fit.alt_c_alt_b, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Cw_xi.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.849 0.108 0.581 0.789 0.872 0.933 0.988 1.00 4000

gamma[109,1,1,2] 0.151 0.108 0.012 0.067 0.128 0.211 0.419 1.00 4000

gamma[109,1,2,1] 0.164 0.112 0.016 0.078 0.142 0.229 0.425 1.00 1200

gamma[109,1,2,2] 0.836 0.112 0.575 0.771 0.858 0.922 0.984 1.00 980

gamma[98,1,1,1] 0.667 0.144 0.364 0.571 0.677 0.776 0.912 1.00 2100

gamma[98,1,1,2] 0.333 0.144 0.088 0.224 0.323 0.429 0.636 1.00 1400

gamma[98,1,2,1] 0.315 0.140 0.087 0.207 0.304 0.403 0.614 1.00 3000

gamma[98,1,2,2] 0.685 0.140 0.386 0.597 0.696 0.793 0.913 1.00 4000

lambda.std[1] 0.370 0.173 0.044 0.239 0.382 0.506 0.679 1.00 3900

lambda.std[2] 0.365 0.165 0.051 0.245 0.371 0.490 0.658 1.01 390

lambda.std[3] 0.491 0.173 0.096 0.383 0.518 0.620 0.759 1.02 200

lambda.std[4] 0.231 0.172 0.010 0.093 0.196 0.338 0.645 1.00 1000

lambda.std[5] 0.221 0.154 0.009 0.096 0.198 0.322 0.571 1.01 390

lambda.std[6] 0.224 0.147 0.011 0.103 0.208 0.329 0.532 1.00 1300

lambda.std[7] 0.205 0.143 0.008 0.089 0.178 0.302 0.523 1.00 710

lambda.std[8] 0.159 0.122 0.006 0.061 0.133 0.232 0.452 1.01 450

lambda.std[9] 0.344 0.165 0.029 0.220 0.355 0.468 0.639 1.01 250

lambda.std[10] 0.380 0.183 0.034 0.241 0.393 0.524 0.689 1.01 240

omega[109,1,2] 0.510 0.210 0.110 0.351 0.518 0.669 0.880 1.00 1700

omega[98,1,2] 0.589 0.135 0.330 0.497 0.577 0.684 0.858 1.00 4000

pi[109,1,2] 0.537 0.302 0.026 0.279 0.539 0.808 0.995 1.00 1700

pi[98,1,2] 0.714 0.269 0.084 0.541 0.803 0.942 0.998 1.00 4000

reli.omega 0.524 0.104 0.300 0.457 0.534 0.599 0.702 1.01 910

deviance 3640.396 35.272 3568.698 3617.872 3640.999 3663.731 3707.740 1.01 250

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 615.0 and DIC = 4255.4

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior C with Alt Tune C \(\xi U(0.5,1.5)\)

\[\lambda \sim N(0,5)\] \[\xi \sim Uniform(0.5,1.5)\]

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]",

"xi")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2

)

# Run model

fit.alt_c_alt_c <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Cw_xi_uniform.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4588

Total graph size: 45401

Initializing modelprint(fit.alt_c_alt_c, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Cw_xi_uniform.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.850 0.232 0.160 0.788 0.973 0.999 1.000 1.01 2800

gamma[109,1,1,2] 0.150 0.232 0.000 0.001 0.027 0.212 0.840 1.00 1500

gamma[109,1,2,1] 0.209 0.268 0.000 0.005 0.076 0.337 0.887 1.01 290

gamma[109,1,2,2] 0.791 0.268 0.113 0.663 0.924 0.995 1.000 1.01 570

gamma[98,1,1,1] 0.663 0.308 0.032 0.426 0.752 0.944 1.000 1.00 2300

gamma[98,1,1,2] 0.337 0.308 0.000 0.056 0.248 0.574 0.968 1.00 2300

gamma[98,1,2,1] 0.274 0.276 0.000 0.038 0.176 0.451 0.922 1.00 910

gamma[98,1,2,2] 0.726 0.276 0.078 0.549 0.824 0.962 1.000 1.01 520

lambda.std[1] 0.395 0.165 0.050 0.278 0.413 0.522 0.675 1.00 660

lambda.std[2] 0.379 0.163 0.050 0.263 0.391 0.500 0.665 1.01 520

lambda.std[3] 0.481 0.165 0.107 0.380 0.503 0.602 0.746 1.02 370

lambda.std[4] 0.210 0.152 0.008 0.082 0.180 0.313 0.554 1.01 180

lambda.std[5] 0.231 0.152 0.012 0.107 0.208 0.337 0.553 1.00 4000

lambda.std[6] 0.232 0.152 0.012 0.108 0.211 0.338 0.557 1.01 370

lambda.std[7] 0.200 0.135 0.009 0.090 0.179 0.291 0.501 1.01 200

lambda.std[8] 0.161 0.130 0.006 0.058 0.131 0.232 0.483 1.00 1500

lambda.std[9] 0.359 0.166 0.039 0.234 0.371 0.484 0.647 1.01 380

lambda.std[10] 0.355 0.179 0.029 0.213 0.359 0.495 0.671 1.01 290

omega[109,1,2] 0.455 0.225 0.045 0.287 0.463 0.611 0.893 1.00 1700

omega[98,1,2] 0.650 0.202 0.236 0.502 0.644 0.822 0.981 1.00 4000

pi[109,1,2] 0.535 0.303 0.024 0.274 0.536 0.810 0.994 1.00 1000

pi[98,1,2] 0.714 0.273 0.064 0.533 0.810 0.942 0.999 1.01 1100

reli.omega 0.525 0.097 0.320 0.462 0.533 0.596 0.693 1.00 800

xi 1.384 0.093 1.165 1.330 1.406 1.461 1.496 1.35 13

deviance 3378.056 46.255 3282.773 3347.844 3378.460 3409.287 3465.528 1.05 57

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 1013.7 and DIC = 4391.7

DIC is an estimate of expected predictive error (lower deviance is better).Alt \(\lambda\) Prior C with Alt Tune D \(\xi G(1,1)\)

\[\lambda \sim N(0,5)\] \[\xi \sim Gamma(1,1)\]

jags.params <- c("lambda.std",

"reli.omega",

"gamma[109,1,1,1]",

"gamma[109,1,1,2]",

"gamma[109,1,2,1]",

"gamma[109,1,2,2]",

"omega[109,1,2]",

"pi[109,1,2]",

"gamma[98,1,1,1]",

"gamma[98,1,1,2]",

"gamma[98,1,2,1]",

"gamma[98,1,2,2]",

"omega[98,1,2]",

"pi[98,1,2]",

"xi")

jags.data <- list(

y = mydata[,1:10],

lrt = mydata[,11:20],

N = nrow(mydata),

nit = 10,

ncat = 2

)

# Run model

fit.alt_c_alt_d <- R2jags::jags(

model = paste0(w.d, "/code/study_4/model_4Cw_xi_gamma.txt"),

parameters.to.save = jags.params,

inits = jags.inits,

data = jags.data,

n.chains = 4,

n.burnin = NBURN,

n.iter = NITER

)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 2840

Unobserved stochastic nodes: 4588

Total graph size: 45400

Initializing modelprint(fit.alt_c_alt_d, width=1000)Inference for Bugs model at "C:/Users/noahp/Documents/GitHub/Padgett-Dissertation/code/study_4/model_4Cw_xi_gamma.txt", fit using jags,

4 chains, each with 10000 iterations (first 5000 discarded), n.thin = 5

n.sims = 4000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

gamma[109,1,1,1] 0.849 0.162 0.417 0.779 0.906 0.972 1.000 1.00 4000

gamma[109,1,1,2] 0.151 0.162 0.000 0.028 0.094 0.221 0.583 1.03 250

gamma[109,1,2,1] 0.173 0.173 0.001 0.037 0.116 0.259 0.615 1.02 330

gamma[109,1,2,2] 0.827 0.173 0.385 0.741 0.884 0.963 0.999 1.00 1900

gamma[98,1,1,1] 0.669 0.210 0.209 0.528 0.696 0.841 0.974 1.01 1900

gamma[98,1,1,2] 0.331 0.210 0.026 0.159 0.304 0.472 0.791 1.01 1100

gamma[98,1,2,1] 0.303 0.202 0.018 0.143 0.269 0.430 0.761 1.04 140

gamma[98,1,2,2] 0.697 0.202 0.239 0.570 0.731 0.857 0.982 1.00 750

lambda.std[1] 0.390 0.171 0.048 0.271 0.401 0.523 0.685 1.00 990

lambda.std[2] 0.374 0.166 0.049 0.258 0.377 0.497 0.675 1.01 3100

lambda.std[3] 0.486 0.167 0.111 0.377 0.516 0.615 0.737 1.01 1200

lambda.std[4] 0.212 0.150 0.009 0.086 0.186 0.316 0.540 1.01 470

lambda.std[5] 0.228 0.148 0.010 0.103 0.214 0.335 0.549 1.01 250

lambda.std[6] 0.222 0.151 0.011 0.101 0.201 0.320 0.554 1.00 2000

lambda.std[7] 0.203 0.140 0.008 0.088 0.182 0.296 0.522 1.00 890

lambda.std[8] 0.168 0.133 0.006 0.063 0.136 0.247 0.490 1.00 670